S&T evaluatology

The objective of science and technology (S&T) evaluation is to identify the most influential achievements from the vast array of global S&T achievements. At present, S&T evaluation primarily relies on bibliometrics, which ranks top scientists and their achievements by publication numbers and citation counts. However, bibliometrics has three major limitations that hinder its ability to recognize the most impactful achievements:

- Publication numbers and citation counts are easily influenced by various confounding factors, such as differences in disciplines and the reputation of researchers.

- It ignores significant technological achievements that have not been formally published, such as MySQL, the Linux operating system, and Git.

- An over-reliance on quantitative metrics often leads to neglecting the quality of the achievements themselves.

To overcome these limitations, the International Open Benchmark Council (BenchCouncil) has developed S&T evaluatology [2] based on the evaluatology framework [1]. This methodology addresses the influence of confounding factors by establishing an extended evaluation condition (EC), which allows for objective comparison of numerous achievements. It also builds a real-world S&T evaluation system (ES) that encompasses the complete collection of S&T achievements, ensuring that unpublished outputs are not overlooked. Furthermore, this approach defines four relationships to clarify the connections between different S&T achievements and uses a four-round filtering rule to prune non-significant achievements and ensure the quality of the selected achievements.

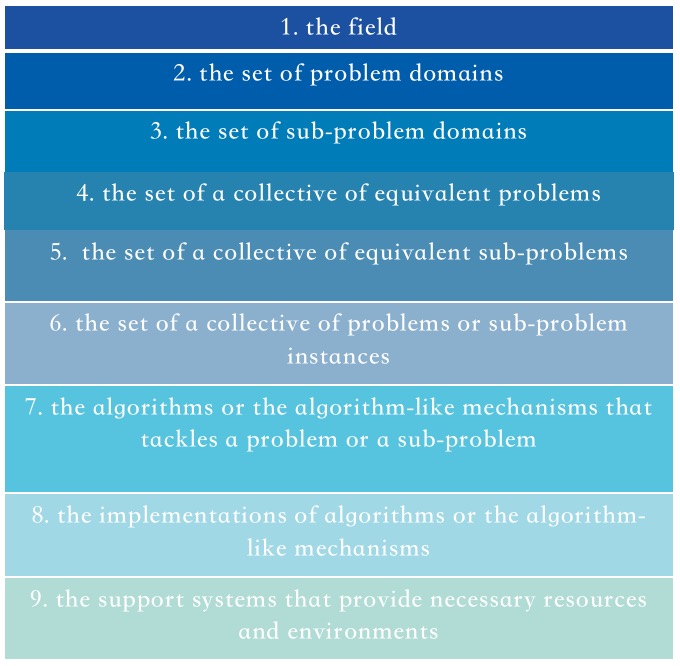

At the core of S&T evaluatology is the concept of the extended EC, as shown in Figure 1. Kang et al. [2] define the extended EC, which consists of nine key components, arranged as follows: “(1) the field that can be broken down into several problem domains; (2) The set of problem domains, each of which can be broken down into various sub-problem domains; (3) the subproblem domains, each of which can be decomposed into several problems; (4) the set of a collective of equivalent problems, each of which can be broken down into multiple sub-problems; (5) the set of a collective of equivalent subproblems; (6) the set of a collective of problems or subproblem instances; (7) the algorithms or the algorithm-like mechanisms that tackles a problem or a sub-problem; (8) the implementations of algorithms or the algorithm-like mechanisms; (9) the support systems that provide necessary resources and environments”.

Figure 1: The Overview of an Extended EC[2].
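The nine components listed above can be pictured as a small data model. The following is a minimal sketch, not the representation used in [2]: the names `ECComponent`, `Achievement`, and `shared_components` are hypothetical and introduced only for illustration. The RISC mapping follows the example given later in this article (the first six levels); `example-implementation` is an invented placeholder, not a real achievement.

```python
from dataclasses import dataclass, field
from enum import IntEnum


class ECComponent(IntEnum):
    """The nine components of the extended EC, numbered as in the quoted list."""
    FIELD = 1
    PROBLEM_DOMAINS = 2
    SUBPROBLEM_DOMAINS = 3
    EQUIVALENT_PROBLEMS = 4
    EQUIVALENT_SUBPROBLEMS = 5
    PROBLEM_INSTANCES = 6
    ALGORITHMS = 7
    IMPLEMENTATIONS = 8
    SUPPORT_SYSTEMS = 9


@dataclass
class Achievement:
    """An S&T achievement and the EC components it is mapped to (hypothetical model)."""
    name: str
    components: set = field(default_factory=set)


def shared_components(a: Achievement, b: Achievement) -> list:
    """Return the EC components two achievements have in common, in level order."""
    return sorted(a.components & b.components)


# RISC is described in this article as mapped to the first six levels.
risc = Achievement("RISC", {c for c in ECComponent if c <= ECComponent.PROBLEM_INSTANCES})

# Invented placeholder mapped to the algorithm and implementation levels.
other = Achievement(
    "example-implementation",
    {ECComponent.ALGORITHMS, ECComponent.IMPLEMENTATIONS},
)

print([c.name for c in sorted(risc.components)])
print(shared_components(risc, other))
```

Mapping each achievement to an explicit set of components makes it easy to check whether two achievements sit at comparable levels before comparing them.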

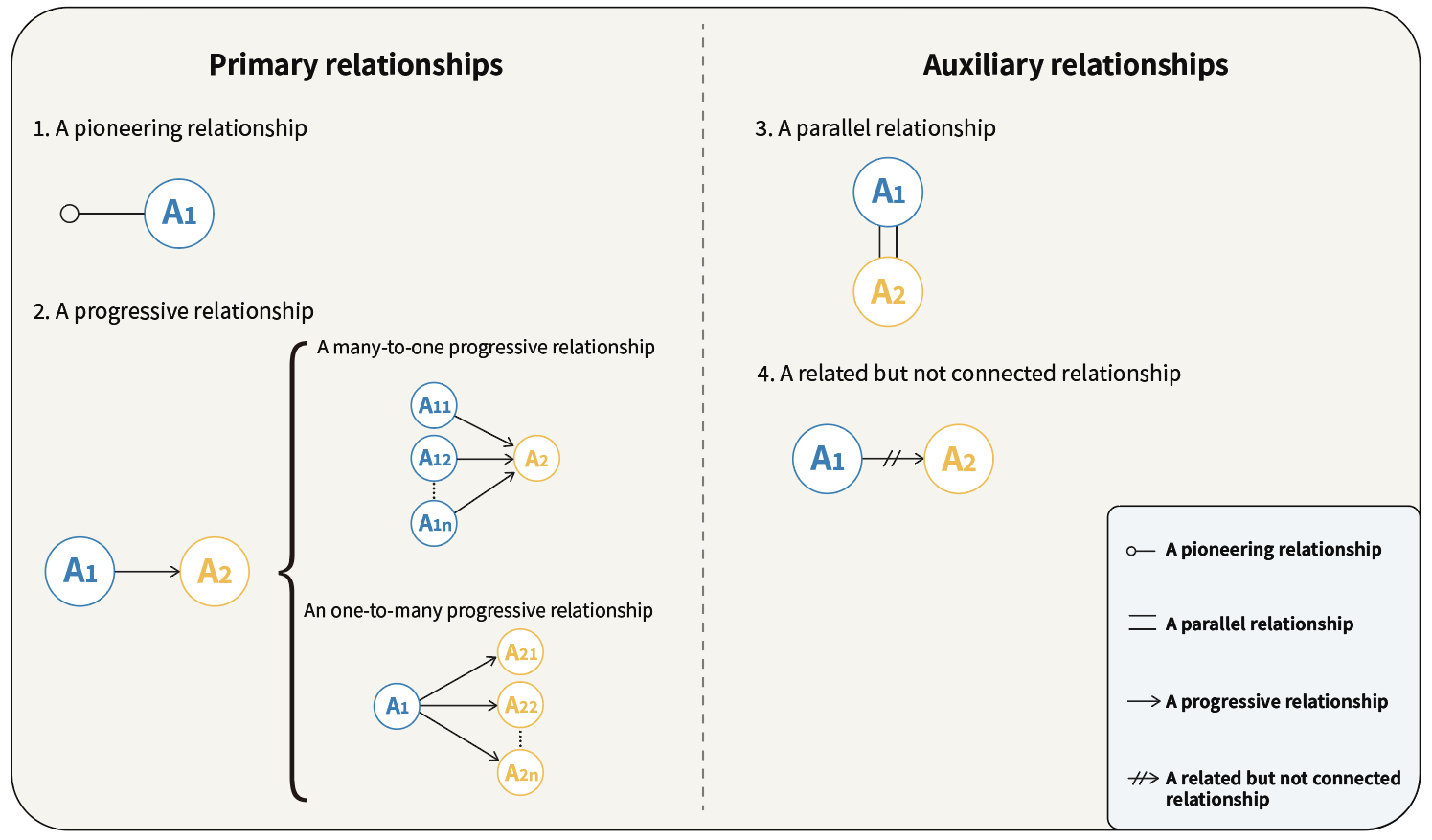

Each S&T achievement can be mapped to different components of the extended EC. Based on the temporal and citation links between achievements, S&T evaluatology defines two fundamental relationships and two auxiliary relationships, as shown in Figure 2. The fundamental relationships are the pioneering and progressive relationships, while the auxiliary relationships are the parallel and "related but not connected" relationships. These four relationships help to elucidate the connections between different achievements.

Figure 2: Two fundamental relationships and two auxiliary relationships among the S&T achievements[2].
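One way to picture these relationships is as labeled links between achievements. The sketch below is an assumption-laden illustration, not the procedure from [2]: this article does not spell out how each relationship is assigned, so the labels are supplied by hand, and the achievement names `A` to `D` are placeholders. The choice to follow only the two fundamental relationships when tracing an achievement's evolution is likewise an assumption, and `Relationship`, `Link`, and `evolution_chain` are hypothetical names.

```python
from collections import deque
from dataclasses import dataclass
from enum import Enum


class Relationship(Enum):
    PIONEERING = "pioneering"
    PROGRESSIVE = "progressive"
    PARALLEL = "parallel"
    RELATED_NOT_CONNECTED = "related but not connected"


# The two fundamental relationships; the other two are auxiliary.
FUNDAMENTAL = {Relationship.PIONEERING, Relationship.PROGRESSIVE}


@dataclass(frozen=True)
class Link:
    """A hand-labeled link from an earlier achievement to a later one."""
    earlier: str
    later: str
    relationship: Relationship


def evolution_chain(start: str, links: list) -> list:
    """Breadth-first walk forward from `start` along fundamental links only."""
    successors = {}
    for link in links:
        if link.relationship in FUNDAMENTAL:
            successors.setdefault(link.earlier, []).append(link.later)

    order, seen, queue = [], {start}, deque([start])
    while queue:
        node = queue.popleft()
        order.append(node)
        for nxt in successors.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return order


# Placeholder achievements with hand-assigned labels.
links = [
    Link("A", "B", Relationship.PIONEERING),
    Link("B", "C", Relationship.PROGRESSIVE),
    Link("B", "D", Relationship.PARALLEL),
]

print(evolution_chain("A", links))  # ['A', 'B', 'C']
```

Here `D` is left out of the chain because its only link is auxiliary; a real system would derive the labels from temporal and citation data rather than take them as input.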

S&T evaluatology establishes a real-world S&T ES that encompasses the complete collection of S&T achievements, mapping each achievement to multiple components of the extended EC. For example, the Reduced Instruction Set Computing (RISC) architecture is mapped to the first six levels of the extended EC. Additionally, S&T evaluatology constructs a perfect evaluation model (EM) that mirrors the real-world system. When all achievements belong to the same research field, the perfect EM can track the evolution of these achievements based on the four relationships, and it applies the four-round filtering rule to prune low-quality achievements. This process ultimately produces a collection of top S&T achievements within a specific field during a particular time frame.

BenchCouncil also provides a video that gives a comprehensive explanation of S&T evaluatology, with a specific focus on its application in the chip technology field.

In conclusion, this S&T evaluatology effectively addresses the shortcomings of bibliometrics, accurately identifying the top N S&T achievements in a specific field and timeframe. Additionally, it provides essential guidance for the establishment of the ETRanking, which aims to benchmark the top 500 pioneers across 500 emerging technologies.

References

[1] Zhan, J., Wang, L., Gao, W., Li, H., Wang, C., Huang, Y., ... & Zhang, Z. (2024). Evaluatology: The science and engineering of evaluation. BenchCouncil Transactions on Benchmarks, Standards and Evaluations, 4(1), 100162.

[2] Kang, G., Gao, W., Wang, L., Luo, C., Ye, H., He, Q., ... & Zhan, J. (2024). Could Bibliometrics Reveal Top Science and Technology Achievements and Researchers? The Case for Evaluatology-based Science and Technology Evaluation. arXiv preprint arXiv:2408.12158.