Summary

Driven by growing amounts of data and compute resources, deep learning has achieved many successes across a variety of domains. In the early days, researchers focused mainly on training more accurate models to prove the validity of AI. Now that many models have reached high accuracy in various domains, delivering AI applications to end users has moved onto the agenda. As AI enters this application phase, inference becomes increasingly important.

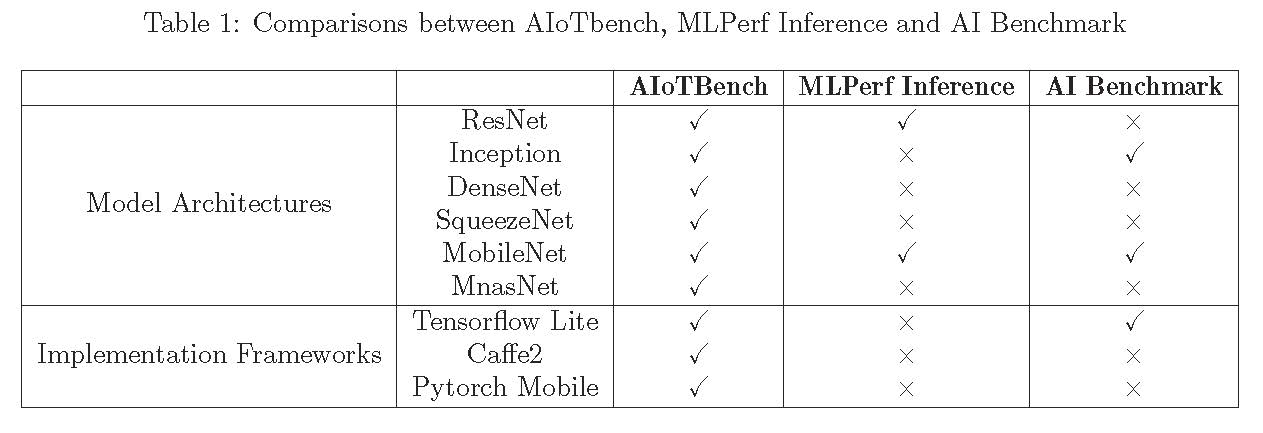

With the popularity of smartphones and the Internet of Things, researchers and engineers have worked to run inference more efficiently on mobile and embedded devices. Neural networks are made more lightweight by using simpler architectures, or by quantizing, pruning, and compressing the networks. Different networks present different trade-offs between accuracy and computational complexity, and they are designed with very different philosophies and architectures. Besides the diversity of models, AI frameworks on mobile and embedded devices are also diverse, e.g., TensorFlow Lite, Caffe2, and PyTorch Mobile. In addition, mobile and embedded devices provide hardware acceleration through GPUs or NPUs to support AI applications. Despite this boom of AI technologies at different levels, there is a lack of benchmarks that comprehensively compare and evaluate representative AI models, frameworks, and hardware in mobile and embedded environments.

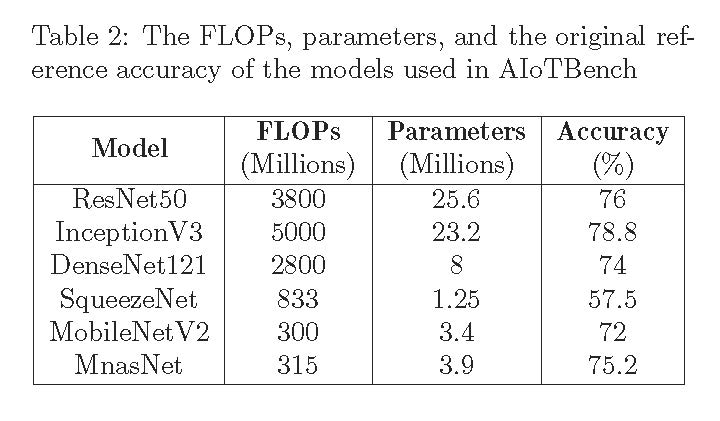

We propose a benchmark suite, AIoTBench, which focuses on evaluating the inference ability of mobile and embedded devices. Considering the representativeness and diversity of both models and frameworks, AIoTBench covers three typical heavy-weight networks: ResNet50, InceptionV3, and DenseNet121, as well as three light-weight networks: SqueezeNet, MobileNetV2, and MnasNet. Each model is implemented in three popular frameworks: TensorFlow Lite, Caffe2, and PyTorch Mobile. For each model in TensorFlow Lite, we also provide three quantized versions: dynamic range quantization, full integer quantization, and float16 quantization. Our benchmark can be run out of the box, enabling end users and designers of algorithms, software, and hardware to make comprehensive comparisons. To compare and rank the AI capabilities of devices, we propose a unified metric, Valid Images Per Second (VIPS), as the AI score; it reflects the trade-off between quality and performance of the AI system.
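The three TensorFlow Lite variants trade numeric precision for model size and speed. The Python sketch below is only a simplified illustration of the underlying number formats, not TensorFlow Lite's converter logic: float16 quantization is shown as a round trip through IEEE 754 half precision, and 8-bit integer quantization (used for weights in dynamic range quantization, and for weights and activations in full integer quantization) is shown as a symmetric per-tensor scheme. TensorFlow Lite's actual schemes (for example per-channel scales and activation calibration) differ in detail, and the function names here are hypothetical.

```python
import struct


def to_float16(values):
    """Round each float to the nearest IEEE 754 half-precision value."""
    # struct's 'e' format packs and unpacks 16-bit half-precision floats.
    return [struct.unpack("<e", struct.pack("<e", v))[0] for v in values]


def quantize_int8(values):
    """Simplified symmetric per-tensor int8 quantization: q = round(v / scale)."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Clamp to the symmetric range [-127, 127].
    codes = [max(-127, min(127, round(v / scale))) for v in values]
    return codes, scale


def dequantize_int8(codes, scale):
    """Map int8 codes back to approximate float values."""
    return [c * scale for c in codes]


weights = [0.5, -1.27, 0.01, 1.0]
half = to_float16(weights)
codes, scale = quantize_int8(weights)
restored = dequantize_int8(codes, scale)
```

Each int8 value is recovered to within half a quantization step, while float16 keeps roughly three significant decimal digits.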

Our benchmark can be used for: 1) Comparing different AI models. Since different models have very different accuracy and performance across platforms, users can trade off accuracy against speed according to their application requirements. 2) Comparing different AI frameworks in the mobile environment. Different frameworks perform differently depending on the model and the device, so users can compare the AI frameworks on mobile devices. 3) Benchmarking and ranking the AI capabilities of different mobile devices. With diverse and representative models and frameworks, mobile devices can be benchmarked and evaluated comprehensively.

Benchmark Design

Taking into account the representativeness and diversity of both models and frameworks, AIoTBench is an off-the-shelf benchmark suite with concrete implementations. It can be run out of the box, enabling end users and designers of algorithms, software, and hardware to make comprehensive comparisons.

- Task and Dataset

Currently, AIoTBench focuses on image classification. A classifier network takes an image as input and predicts its class. Image classification is a key task in pattern recognition and artificial intelligence; it is intensively studied by the academic community and widely used in commercial applications. Classifier networks are also widely used in other vision tasks. It is common practice to pre-train a classifier network on the ImageNet classification dataset, then replace the last layer and fine-tune the network for the new vision task through transfer learning, with the pre-trained network serving as the feature extractor. For example, object detection and segmentation models often use pre-trained classifier networks as backbones to extract feature maps.

The ILSVRC (ImageNet Large Scale Visual Recognition Challenge) 2012 dataset is used in our benchmark. The original dataset has about 1.28 million training images and 50,000 validation images across 1,000 classes. Since we focus on evaluating the inference of AI models, we only need the validation data. Running the models on the entire validation set on mobile devices takes too long, so we randomly sample 100 classes, and 5 images per class, from the original validation images. The final validation dataset used in our benchmark thus has 500 images in total.

- Model Diversity

Although AlexNet played a pioneering role, it is rarely used today. Considering diversity and popularity, we choose three typical heavy-weight networks: ResNet50, InceptionV3, and DenseNet121, as well as three light-weight networks: SqueezeNet, MobileNetV2, and MnasNet. These networks are widely used in academia and industry. Table 2 presents the FLOPs, the number of parameters, and the original reference accuracy of the selected models.

- Framework Diversity

On mobile and embedded devices, the framework used to implement a model is also part of the workload. Besides the diversity of models, there is also a diversity of AI frameworks in the community; they are backed by different large companies and have large user bases. AIoTBench covers three popular and representative frameworks: TensorFlow Lite, Caffe2, and PyTorch Mobile.

- AI Score

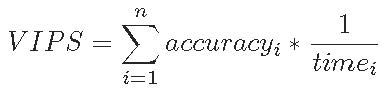

Distilling the AI capabilities of a system into a unified score enables direct comparison and ranking of different devices. We propose a unified metric as the AI score: Valid Images Per Second (VIPS).

The reciprocal of the average running time reflects the throughput of the system. Since the trade-off between quality and performance is always a consideration in the AI domain, accuracy is used as a weight coefficient when computing the final AI score.
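The text describes the score only in words, so the Python sketch below is one plausible reading rather than AIoTBench's exact definition: for each model, the reciprocal of the average per-image inference time (in seconds) is weighted by the model's accuracy, which amounts to counting correctly classified, or "valid", images per second. How per-model values are combined across models and frameworks is an assumption here (a plain sum), and the function names and input numbers are hypothetical, not measured results.

```python
from statistics import mean


def model_vips(accuracy, times_s):
    """Accuracy-weighted throughput of one model: accuracy * (1 / mean time)."""
    return accuracy / mean(times_s)


def ai_score(results):
    """Combine per-model VIPS values; summing them is an assumption here."""
    return sum(model_vips(accuracy, times_s) for accuracy, times_s in results)


# Hypothetical inputs, not measured results:
# (top-1 accuracy, per-image inference times in seconds) for two models.
results = [(0.75, [0.10, 0.10]), (0.70, [0.02, 0.03])]
score = ai_score(results)
```

Weighting by accuracy means a fast but inaccurate model cannot dominate the score: halving accuracy halves its contribution just as doubling latency would.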

Benchmark Implementation

Currently, we have developed the Android version of AIoTBench. It contains four modules:

1) Configure module. Users can configure the path of the model file and the path of the dataset. The preprocessing parameters are set in a configuration file. We provide the dataset, the prepared models, and the corresponding preprocessing configurations in the default path. Adding a new model is straightforward: users just need to i) prepare the model file and put it in the model path, and ii) add the preprocessing settings for this model to the configuration file.
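The format of the preprocessing configuration file is not described here. As a purely hypothetical sketch, each model entry could record its model file, framework, input size, and per-channel normalization constants, so that step ii) amounts to adding one validated entry. Every key, path, and value below is an illustrative assumption, not AIoTBench's actual file format; the ResNet50 constants are the commonly used ImageNet mean and standard deviation scaled to the 0 to 255 range.

```python
import json

# Hypothetical per-model preprocessing configuration; every key, path, and
# value here is illustrative, not AIoTBench's actual file format.
CONFIG_TEXT = """
{
  "dataset_path": "dataset/",
  "models": {
    "mobilenet_v2": {
      "model_file": "models/mobilenet_v2.tflite",
      "framework": "tflite",
      "input_size": 224,
      "mean": [127.5, 127.5, 127.5],
      "std": [127.5, 127.5, 127.5]
    }
  }
}
"""

REQUIRED_KEYS = {"model_file", "framework", "input_size", "mean", "std"}


def check_entry(name, entry):
    """Raise if a model entry lacks any required preprocessing setting."""
    missing = REQUIRED_KEYS - set(entry)
    if missing:
        raise ValueError(f"{name}: missing {sorted(missing)}")


def load_config(text):
    """Parse the configuration and validate every model entry."""
    config = json.loads(text)
    for name, entry in config["models"].items():
        check_entry(name, entry)
    return config


def add_model(config, name, entry):
    """Register a new model by adding its preprocessing settings."""
    check_entry(name, entry)
    config["models"][name] = entry


config = load_config(CONFIG_TEXT)
add_model(config, "resnet50", {
    "model_file": "models/resnet50.tflite",
    "framework": "tflite",
    "input_size": 224,
    "mean": [123.675, 116.28, 103.53],
    "std": [58.395, 57.12, 57.375],
})
```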

2) Preprocess module. This module reads and preprocesses the images.
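The preprocessing steps themselves are not listed here. A common pipeline for ImageNet classifiers resizes each image, takes a center crop of the model's input size, and normalizes every channel with the model's mean and standard deviation. The Python sketch below shows only the crop and normalization steps, on images represented as nested H x W x C lists purely for illustration; resizing is omitted, the function names are hypothetical, and real Android code would operate on bitmaps or tensors instead.

```python
def center_crop(image, size):
    """Return the central size x size region of an H x W x C nested list."""
    height, width = len(image), len(image[0])
    if size > height or size > width:
        raise ValueError("crop size exceeds image size")
    top, left = (height - size) // 2, (width - size) // 2
    return [row[left:left + size] for row in image[top:top + size]]


def normalize(image, mean, std):
    """Apply (pixel - mean) / std to each channel of every pixel."""
    return [
        [[(p - m) / s for p, m, s in zip(pixel, mean, std)] for pixel in row]
        for row in image
    ]


def preprocess(image, size, mean, std):
    """Center-crop then normalize; resizing is omitted in this sketch."""
    return normalize(center_crop(image, size), mean, std)


# A hypothetical 4 x 4 RGB image with every pixel set to white.
image = [[[255, 255, 255] for _ in range(4)] for _ in range(4)]
tensor = preprocess(image, 2, mean=[127.5] * 3, std=[127.5] * 3)
```

With a mean and standard deviation of 127.5, pixel values in [0, 255] map to [-1, 1].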

3) Predict module. Since different frameworks have different inference APIs, we abstract two interfaces and implement them for the three frameworks: TensorFlow Lite, Caffe2, and PyTorch Mobile.

The interface prepare() loads and initializes the model, and infer() executes the model inference. Adding a new framework is also easy: users just need to implement these two interfaces using the API of the new framework.
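The Android implementation defines these interfaces in its own code. As a language-neutral illustration, the Python sketch below renders prepare() and infer() as an abstract base class; the class names Predictor and EchoPredictor, the parameter names, and the returned score format are hypothetical. Supporting a new framework then means writing one subclass whose two methods call that framework's loading and inference APIs.

```python
from abc import ABC, abstractmethod


class Predictor(ABC):
    """Framework-neutral inference interface (hypothetical Python rendering)."""

    @abstractmethod
    def prepare(self, model_path):
        """Load and initialize the model stored at model_path."""

    @abstractmethod
    def infer(self, inputs):
        """Run inference on one preprocessed input and return class scores."""


class EchoPredictor(Predictor):
    """Toy 'framework' for demonstration: it treats the input as the scores."""

    def __init__(self):
        self.model_path = None

    def prepare(self, model_path):
        # A real subclass would call its framework's model-loading API here.
        self.model_path = model_path

    def infer(self, inputs):
        if self.model_path is None:
            raise RuntimeError("prepare() must be called before infer()")
        # A real subclass would call its framework's inference API here.
        return list(inputs)


predictor = EchoPredictor()
predictor.prepare("models/example.model")
scores = predictor.infer([0.1, 0.7, 0.2])
```

Because Predictor is abstract, a subclass that forgets either method cannot be instantiated, which catches incomplete framework ports early.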

4) Score module. The accuracy and inference time of each test run are logged, and the final AI score is computed.
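A minimal sketch of the measurement loop, assuming each sample is a preprocessed input paired with its true class index and that the predictor exposes an infer() method returning one score per class. It times each inference with a monotonic clock, takes the top-1 prediction as the index of the highest score, logs each result, and returns the accuracy together with the per-image times, the two quantities the AI score combines. The logger name and the ScoresAsInput stand-in are hypothetical.

```python
import logging
import time

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("benchmark")


def run_benchmark(predictor, samples):
    """Run every sample once, logging top-1 correctness and latency.

    samples: list of (preprocessed_input, true_label) pairs.
    Returns (top-1 accuracy, list of per-image times in seconds).
    """
    times, correct = [], 0
    for index, (inputs, label) in enumerate(samples):
        start = time.perf_counter()
        scores = predictor.infer(inputs)
        elapsed = time.perf_counter() - start
        times.append(elapsed)
        # Top-1 prediction: index of the highest class score.
        predicted = max(range(len(scores)), key=scores.__getitem__)
        if predicted == label:
            correct += 1
        log.info("image %d: label=%d predicted=%d time=%.6fs",
                 index, label, predicted, elapsed)
    return correct / len(samples), times


class ScoresAsInput:
    """Hypothetical stand-in predictor whose input already is the score list."""

    def infer(self, inputs):
        return inputs


samples = [([0.1, 0.9], 1), ([0.8, 0.2], 0), ([0.3, 0.7], 0)]
accuracy, times = run_benchmark(ScoresAsInput(), samples)
```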

Contributors

Prof. Jianfeng Zhan, ICT, Chinese Academy of Sciences, and BenchCouncil

Chunjie Luo, ICT, Chinese Academy of Sciences

Xiwen He, ICT, Chinese Academy of Sciences

Xinke Zhao, ICT, Chinese Academy of Sciences

Dr. Wanling Gao, ICT, Chinese Academy of Sciences

Dr. Lei Wang, ICT, Chinese Academy of Sciences

Ranking

AIoTBench results have been released.

License

AIoTBench is available for researchers interested in AI. AIoTBench is open-source under the Apache License, Version 2.0; please use all files in compliance with the License. The software components of AIoTBench are all available as open-source software and are governed by their own licensing terms. Researchers intending to use AIoTBench are required to fully understand and abide by the licensing terms of the various components. Software developed externally (not by the AIoTBench group):

- TensorFlow: https://www.tensorflow.org

- PyTorch: https://pytorch.org/

- Caffe2: http://caffe2.ai

- Redistribution of source code must comply with the license and notice disclaimers.

- Redistribution in binary form must reproduce the above copyright notice, this list of conditions and the following disclaimers in the documentation and/or other materials provided with the distribution.

THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS “AS IS” AND ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE ARE DISCLAIMED. IN NO EVENT SHALL THE ICT CHINESE ACADEMY OF SCIENCES BE LIABLE FOR ANY DIRECT, INDIRECT, INCIDENTAL, SPECIAL, EXEMPLARY, OR CONSEQUENTIAL DAMAGES (INCLUDING, BUT NOT LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR SERVICES; LOSS OF USE, DATA, OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER CAUSED AND ON ANY THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT LIABILITY, OR TORT (INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY OUT OF THE USE OF THIS SOFTWARE, EVEN IF ADVISED OF THE POSSIBILITY OF SUCH DAMAGE.