Edge AIBench 1.0

Edge AIBench is the first end-to-end benchmark for intelligent IoT-edge-cloud computing systems, and it covers the characteristics of most intelligent IoT-edge-cloud workloads. It is built on a flexible framework that lets users customize the benchmark modules they need, and it includes four representative scenarios and ten different AI workloads. In this thesis, we use Edge AIBench to evaluate a real IoT-edge-cloud computing system, measuring its strengths and weaknesses in different scenarios.

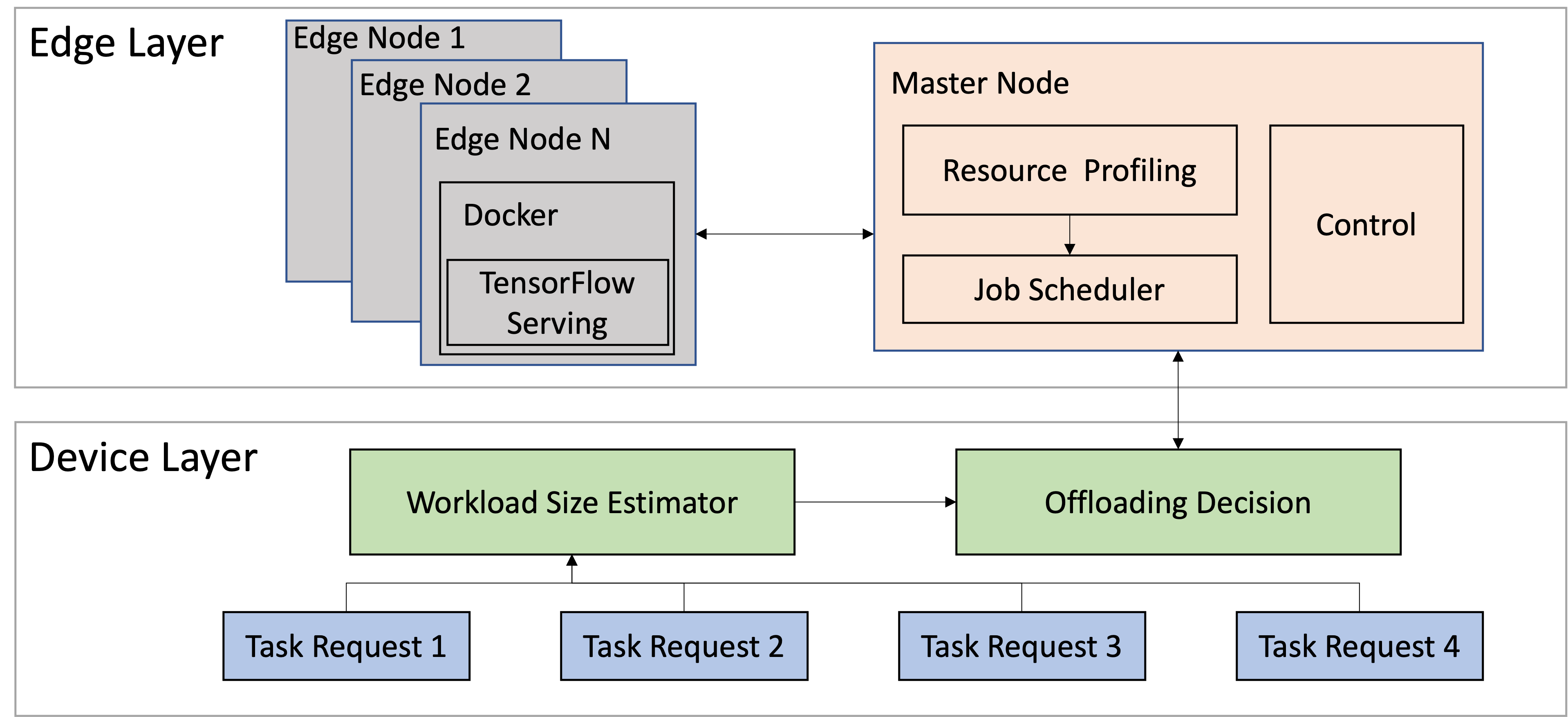

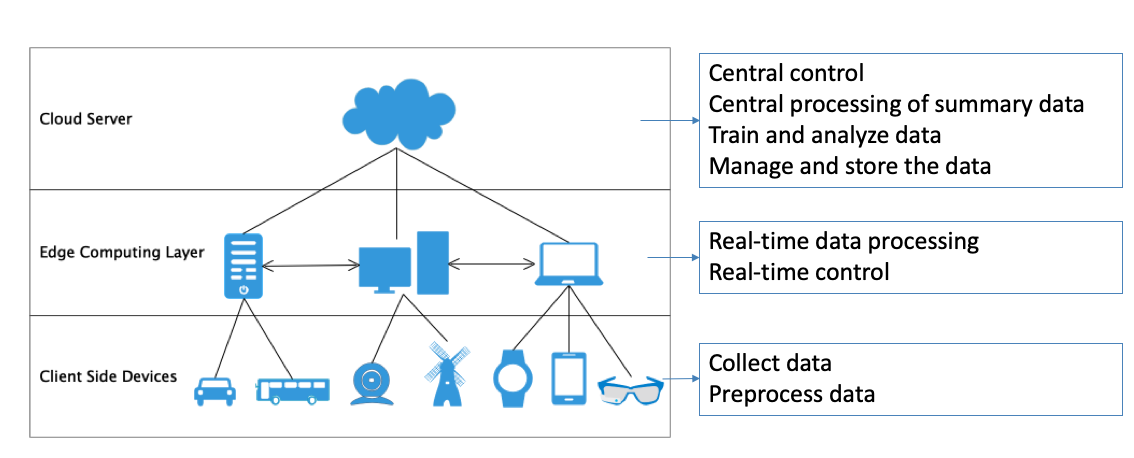

The rapid growth in the number and variety of client-side devices brings great challenges to computing power, data storage, and networking. The traditional solution to these problems is the cloud computing framework. With the development of 5G network technology, the Internet of Things (IoT) has become another solution. Moreover, edge computing, which combines cloud computing and the IoT framework, has developed rapidly in recent years. In edge computing scenarios, the distribution of data and the collaboration of workloads across layers are serious concerns for performance, security, and privacy. Therefore, edge computing benchmarking must take an end-to-end view that considers all three layers: client-side devices, the edge computing layer, and cloud servers.

After surveying a set of edge computing applications, we find that they are highly diverse in many aspects. Thus, we need to consider the distinct operational characteristics of these scenarios.

Moreover, edge cloud computing has recently received great attention from industry. For this reason, we also consider industrial tasks and requirements. AI tasks, such as image classification, face embedding, and speech recognition, are commonly involved in edge computing scenarios.

Therefore, AI applications need to be treated as representative workloads in our benchmark suite.

Based on these concerns, we consider AI scenarios to be the primary scenarios of edge computing. Additionally, we summarize six properties of edge AI computing in this section.

To organize our benchmark suite, we adopt a scenario-based view: we extract primary scenarios that represent the characteristics of edge computing and that together cover the complexity of its applications. Edge AIBench is thus a scenario benchmark that considers both the characteristics of each scenario and its typical applications.

In Edge AIBench, we extract four typical scenarios that represent these characteristics and, we believe, the complexity of edge computing: autonomous vehicle, ICU patient monitor, surveillance camera, and smart home. Edge AIBench provides end-to-end application benchmarking on a general three-layer edge computing framework, covering data collection, training, validation, and inference. Table 1 shows the component benchmarks of Edge AIBench.

Table 1. Summary of Edge AIBench

| Program Name | Edge AI Scenarios | Models | Datasets | Implementation |
|---|---|---|---|---|
| Lane Detection | Autonomous Vehicle | LaneNet | TuSimple, CULane | PyTorch/Caffe |
| Traffic Sign Detection | Autonomous Vehicle | Capsule Network | German Traffic Sign Recognition | Keras |
| Death Prediction | ICU Patient Monitor | LSTM | MIMIC-III | TensorFlow/Keras |
| Decompensation Prediction | ICU Patient Monitor | LSTM | MIMIC-III | TensorFlow/Keras |
| Phenotype Classification | ICU Patient Monitor | LSTM | MIMIC-III | TensorFlow/Keras |
| Person Re-identification | Surveillance Camera | DG-Net | Market-1501 | PyTorch |
| Action Detection | Surveillance Camera | ResNet18 | UCF101 | PyTorch/Caffe |
| Face Recognition | Smart Home | FaceNet/SphereNet | LFW/CASIA-WebFace | TensorFlow/Caffe |
| Speech Recognition | Smart Home | DeepSpeech2 | LibriSpeech | TensorFlow |

ICU Patient Monitor. The ICU is where critically ill patients are treated, so immediacy is essential in this scenario: doctors must be notified of patients' status as soon as possible. We use the MIMIC-III dataset, which provides many kinds of patient data, such as vital signs and fluid balance. We choose heart failure prediction and endpoint prediction as the AI benchmarks.

Surveillance Camera. Surveillance cameras are deployed all over the world and continuously produce large volumes of video data. Transmitting all of this data to cloud servers would require very high network bandwidth. Therefore, this scenario focuses on data preprocessing and compression at the edge.
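A back-of-the-envelope sketch makes the bandwidth argument concrete. The camera count, the per-camera bitrate, and the 5% share of video kept after edge filtering are hypothetical numbers chosen for illustration, not measurements from Edge AIBench.

```python
def uplink_mbps(num_cameras: int, bitrate_mbps: float, kept_fraction: float = 1.0) -> float:
    """Aggregate camera-to-cloud bandwidth in Mbit/s.

    kept_fraction is the share of raw video still sent after edge
    preprocessing and compression; 1.0 means everything is forwarded.
    """
    if not 0.0 <= kept_fraction <= 1.0:
        raise ValueError("kept_fraction must be in [0, 1]")
    return num_cameras * bitrate_mbps * kept_fraction


# Hypothetical deployment: 1,000 cameras at 4 Mbit/s each.
raw = uplink_mbps(1000, 4.0)                           # no edge processing
filtered = uplink_mbps(1000, 4.0, kept_fraction=0.05)  # edge keeps 5%
print(f"raw: {raw:.0f} Mbit/s, after edge filtering: {filtered:.0f} Mbit/s")
```

Under these assumed numbers, edge filtering cuts the uplink from 4,000 Mbit/s to 200 Mbit/s, which is why the scenario stresses preprocessing at the edge rather than raw transmission.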

Smart Home. A smart home contains many smart devices, such as automatic controllers, alarm systems, and audio equipment. Thus, what distinguishes the smart home scenario is its variety of edge devices and its heterogeneous data. We choose two AI applications as the component benchmarks: speech recognition and face recognition, which involve heterogeneous data and different collecting devices. Both component benchmarks collect data on client-side devices (e.g., cameras and smartphones), perform inference on the edge computing layer, and train on the cloud server.
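The placement of stages across the three layers can be written down as a small data model. This is a minimal sketch, assuming a simple stage-to-layer mapping; the names `Layer`, `Stage`, `ComponentBenchmark`, and `smart_home_benchmark` are hypothetical and are not part of the Edge AIBench code base.

```python
from dataclasses import dataclass, field
from enum import Enum


class Layer(Enum):
    CLIENT = "client-side device"
    EDGE = "edge computing layer"
    CLOUD = "cloud server"


@dataclass
class Stage:
    name: str
    layer: Layer


@dataclass
class ComponentBenchmark:
    name: str
    stages: list[Stage] = field(default_factory=list)

    def placement(self) -> dict[str, str]:
        """Map each stage name to the layer it runs on."""
        return {s.name: s.layer.value for s in self.stages}


def smart_home_benchmark(name: str) -> ComponentBenchmark:
    # Hypothetical helper: placement follows the smart home description,
    # i.e. collect on the client, infer on the edge, train in the cloud.
    return ComponentBenchmark(name, [
        Stage("data collection", Layer.CLIENT),
        Stage("inference", Layer.EDGE),
        Stage("training", Layer.CLOUD),
    ])


for bench in (smart_home_benchmark("Face Recognition"),
              smart_home_benchmark("Speech Recognition")):
    print(bench.name, bench.placement())
```

Keeping placement as data rather than hard-coding it reflects the customizable-module idea: another scenario could move a stage to a different layer without changing the benchmark logic.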

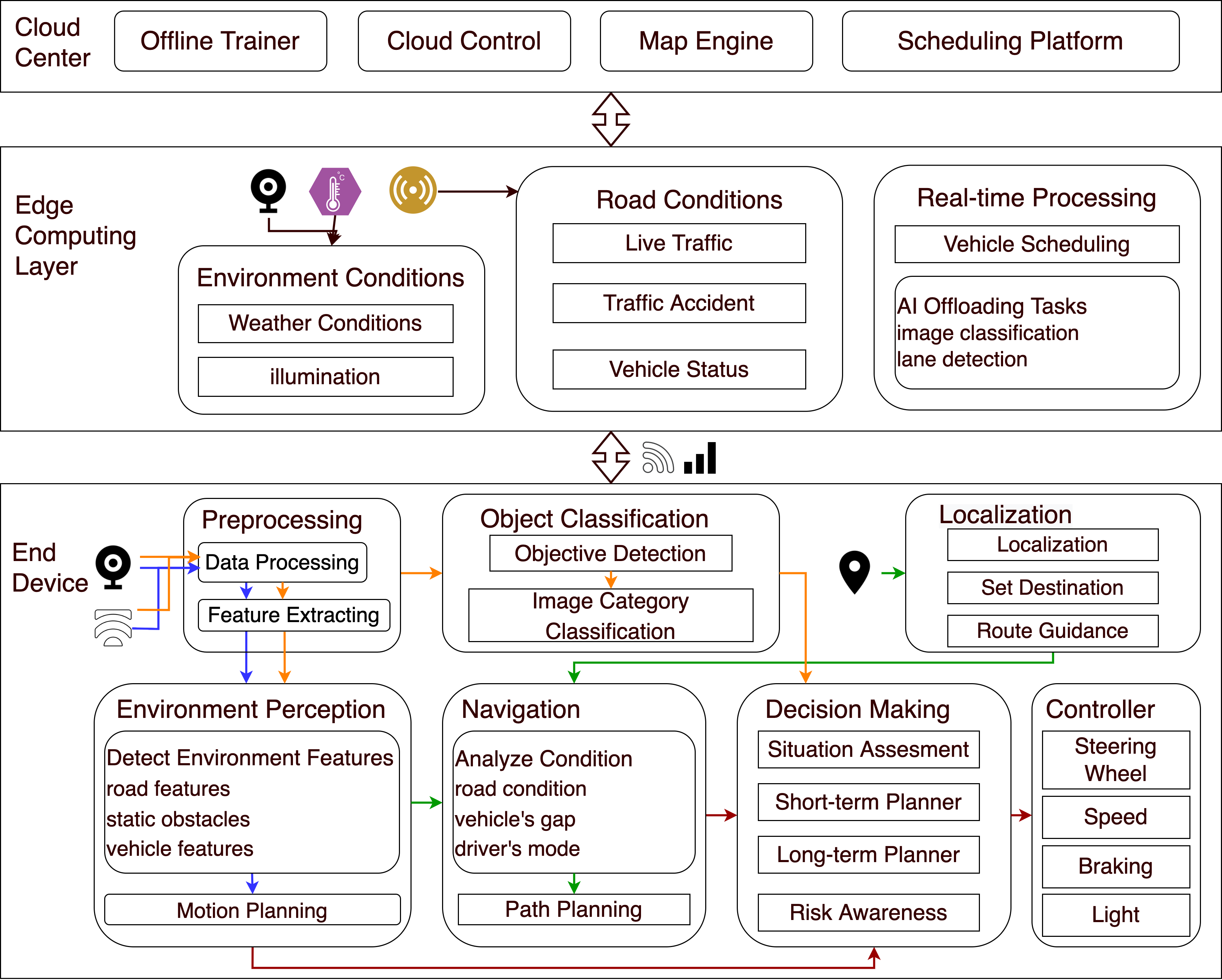

Autonomous Vehicle. What distinguishes the autonomous vehicle scenario is its strict demand for correctness: the vehicle must take absolutely correct actions even without human intervention. This requirement is representative of some edge computing AI scenarios. The automatic control system analyzes the current road conditions and reacts immediately. We choose road sign recognition as the component benchmark.

A Federated Learning Framework Testbed. We have developed an edge computing AI testbed to support researchers and general users; it is publicly available from /testbed.html. Security and privacy have become major concerns in the age of big data and edge computing. Federated learning is a distributed, collaborative machine learning technique whose main goal is to preserve privacy. Our testbed system will incorporate the federated learning framework.
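The text does not say which aggregation algorithm the testbed uses. As an illustration only, here is the server-side step of Federated Averaging (FedAvg), a widely used federated learning algorithm swapped in as an assumption: each client trains locally and sends only its parameters, and the server averages them weighted by each client's sample count. The function name `federated_average` is hypothetical.

```python
def federated_average(client_weights: list[list[float]],
                      client_sizes: list[int]) -> list[float]:
    """FedAvg-style aggregation (sketch): average the clients' parameter
    vectors, weighting each by its number of local training samples.
    Only parameters reach the server; raw data stays on the clients."""
    if not client_weights or len(client_weights) != len(client_sizes):
        raise ValueError("need at least one client and one size per client")
    total = sum(client_sizes)
    dim = len(client_weights[0])
    global_weights = [0.0] * dim
    for weights, n in zip(client_weights, client_sizes):
        if len(weights) != dim:
            raise ValueError("all clients must share the same model shape")
        for i, w in enumerate(weights):
            global_weights[i] += w * n / total
    return global_weights


# Two hypothetical clients with 1 and 3 local samples.
print(federated_average([[1.0, 2.0], [3.0, 4.0]], [1, 3]))
```

A full training round would also broadcast the averaged weights back to the clients for the next round of local training; that loop is omitted here.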

Edge AIBench 2.0

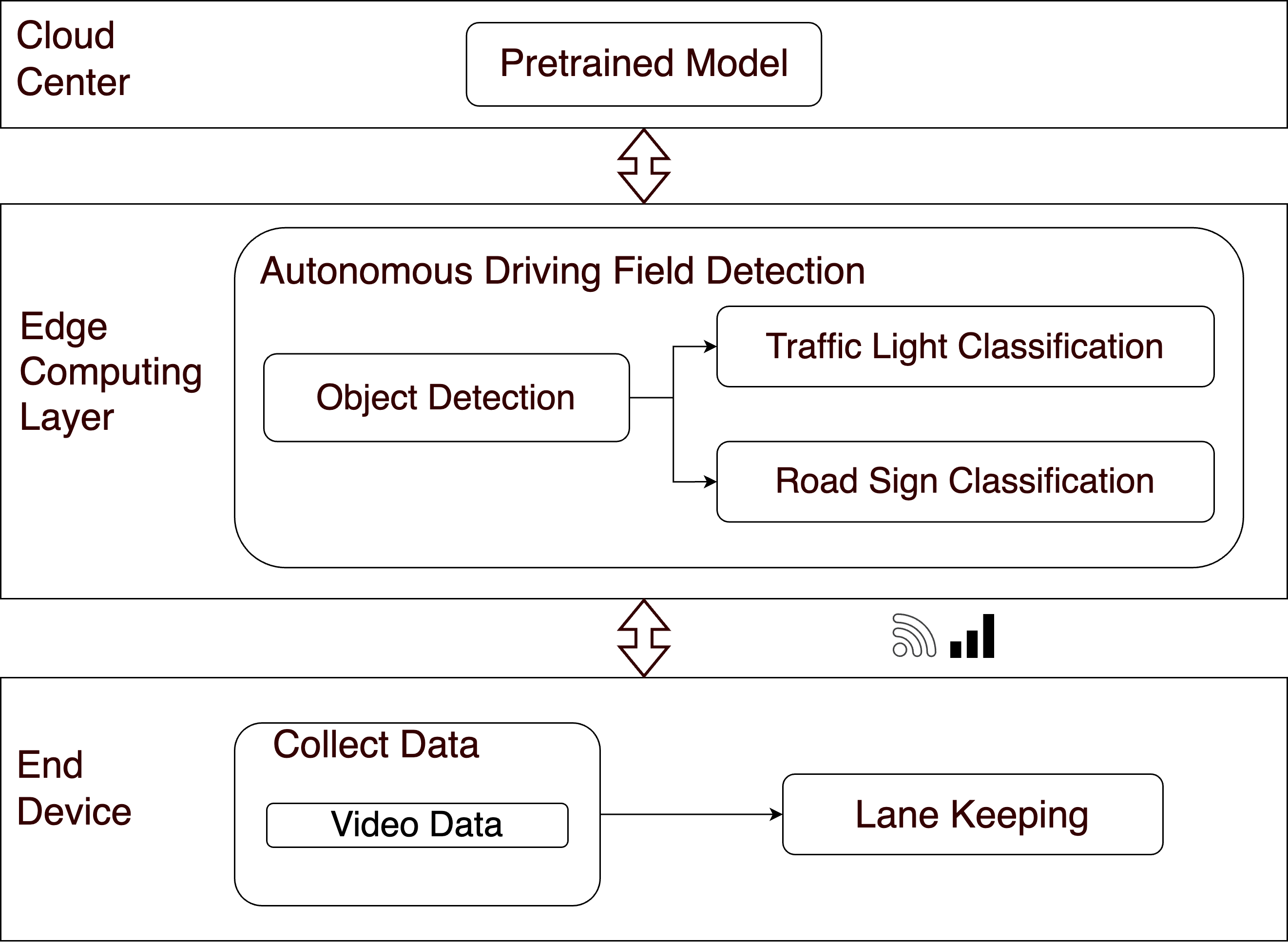

Edge AIBench 2.0 is a scenario benchmark for IoT-Edge-Cloud systems. We propose a set of distilling rules for replicating autonomous vehicle scenarios that extract the critical tasks and their intertwined interactions. The distilled benchmark captures the essential system-level and component-level characteristics while significantly reducing system complexity, so users can quickly evaluate the system and pinpoint system and component bottlenecks. We also implement a scalable architecture that lets users assess systems with workloads of different sizes.

We use a set of directed acyclic graph (DAG) models to formalize the overall tasks of an autonomous vehicle.
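A DAG model makes task dependencies explicit and lets bottlenecks be located as the longest (critical) path. The sketch below uses Python's standard `graphlib.TopologicalSorter` (Python 3.9+). The toy graph is loosely modeled on tasks named in this section, and the task names and latencies are hypothetical, not measured Edge AIBench values.

```python
from graphlib import TopologicalSorter

# Hypothetical autonomous-driving DAG: each task maps to the set of tasks
# it depends on (its predecessors).
DAG = {
    "camera capture": set(),
    "object detection": {"camera capture"},
    "traffic signal classification": {"object detection"},
    "road sign classification": {"object detection"},
    "lane detection": {"camera capture"},
    "decision": {"traffic signal classification",
                 "road sign classification", "lane detection"},
}

# Hypothetical per-task latencies in milliseconds (illustrative only).
LATENCY_MS = {
    "camera capture": 5, "object detection": 40,
    "traffic signal classification": 10, "road sign classification": 12,
    "lane detection": 30, "decision": 2,
}


def critical_path(dag, latency):
    """Return (total latency, task list) of the longest path through the DAG."""
    finish = {}     # earliest finish time of each task
    best_pred = {}  # predecessor that determines each task's start time
    for task in TopologicalSorter(dag).static_order():
        start, best_pred[task] = 0, None
        for p in dag[task]:
            if finish[p] > start:
                start, best_pred[task] = finish[p], p
        finish[task] = start + latency[task]
    node = max(finish, key=finish.get)
    total, path = finish[node], []
    while node is not None:  # walk back along the critical predecessors
        path.append(node)
        node = best_pred[node]
    return total, path[::-1]


print(critical_path(DAG, LATENCY_MS))
```

With these assumed latencies the critical path runs camera capture, object detection, road sign classification, decision, for 59 ms in total, so speeding up lane detection alone would not reduce end-to-end latency.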

Formalizing the whole IoT-Edge-Cloud scenario is very complex. A scenario benchmark implemented directly from such a formalization would involve hundreds of millions of lines of code and a vast amount of data, which makes it hard for users to evaluate the system. Therefore, we simplify the autonomous vehicle scenario into several interdependent execution modules. Based on real-world experience with autonomous driving, we propose a set of distilling rules for autonomous driving tasks, aiming for distilled modules that still perform the critical tasks of an autonomous driving system while retaining its complexity and challenges.

1. Retain only representative tasks among those that make use of similar models and serve similar purposes.

Autonomous driving involves various object recognition and detection tasks, including obstacle recognition, pedestrian recognition, traffic signal recognition, and route recognition. With the exception of road route recognition for lane keeping, most of these tasks share similar processing logic and critical paths and are typically completed in two steps: detection and classification. First, the object is detected and localized in the video image; then it is classified to complete the recognition. Therefore, from these tasks we extract traffic signal recognition, which is critical to driving safety, and lane detection, which ensures that vehicles obey traffic rules.

2. Prune the tasks executed on the cloud and edge servers and those in parallel with the user-end tasks.

In IoT-Edge-Cloud systems, user-end devices, edge servers, and cloud data center servers execute tasks in parallel without affecting each other. Thus, the training and scheduling tasks in the cloud do not affect the vehicle's driving process, and we prune them. We also prune the vehicle analysis and environment perception modules at the edge.

3. Prune the modules whose running time is less than 1\% of the total running time.

Our analysis of the real system shows that text data transmission latency and the processing time of data collected by sensors, radar, and other devices account for a very small share of the total running time. The final decision and control modules, which do not involve AI models, also complete very quickly. Therefore, we trim these modules.

4. Combine similar tasks that are executed concurrently if possible.

Both the traffic signal classification and road sign classification tasks begin with object detection to localize objects. Thus, we merge the object detection step of these two modules and send its results to the subsequent traffic signal classification and road sign classification tasks.

5. Remove the route planning module.

Route planning is one of the most critical tasks in autonomous driving, but we remove it from this scenario benchmark because it does not require real-time image data, and transmitting the planning results takes very little time. In existing real-world deployments, this task is usually performed on a cloud server. Route planning algorithms are already very mature, and many advanced online navigation maps are available to users. Baidu's Apollo autonomous-driving-level navigation~\cite{Apollo} is now in use, reducing the speed of passing vehicles at intersections by 36.8\%. As a result, this module can be trimmed.

6. Remove the predecessor and successor tasks of the pruned modules.

After simplifying the overall scenario according to the first five distilling rules, we re-examine the DAG model and remove any tasks that precede or follow the pruned modules.
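Rules 3 and 6 can be read as graph transformations on the DAG model. This is a minimal sketch under two stated assumptions: a module pruned by rule 3 is contracted (its surviving successors are reconnected to its surviving predecessors), and rule 6 repeatedly drops tasks that only fed, or only consumed the output of, removed tasks. Both helpers and the example graph are hypothetical, not Edge AIBench code.

```python
# A DAG maps each task name to the set of tasks it depends on.

def prune_short_modules(dag, runtime, threshold=0.01):
    """Rule 3 sketch: drop tasks whose running time is below `threshold`
    (1% by default) of the total, reconnecting surviving tasks to the
    nearest surviving ancestors of any pruned predecessors."""
    total = sum(runtime.values())
    short = {t for t, r in runtime.items() if r < threshold * total}
    pruned = {}
    for task, preds in dag.items():
        if task in short:
            continue
        new_preds, stack, seen = set(), list(preds), set()
        while stack:  # walk back through chains of pruned tasks
            p = stack.pop()
            if p in seen:
                continue
            seen.add(p)
            if p in short:
                stack.extend(dag[p])
            else:
                new_preds.add(p)
        pruned[task] = new_preds
    return pruned


def remove_with_dependents(dag, removed):
    """Rule 6 sketch: after removing `removed` (e.g. route planning), also
    drop tasks that only fed removed tasks or only consumed their output,
    repeating until nothing else changes."""
    removed = set(removed)
    succs = {t: set() for t in dag}
    for t, preds in dag.items():
        for p in preds:
            succs[p].add(t)
    changed = True
    while changed:
        changed = False
        for t in dag:
            if t in removed:
                continue
            feeds_only_removed = bool(succs[t]) and succs[t] <= removed
            consumes_only_removed = bool(dag[t]) and dag[t] <= removed
            if feeds_only_removed or consumes_only_removed:
                removed.add(t)
                changed = True
    return {t: dag[t] - removed for t in dag if t not in removed}


dag = {
    "camera": set(), "preprocess": {"camera"},
    "lane detection": {"preprocess"}, "control": {"lane detection"},
    "map download": set(), "route planning": {"map download"},
    "route display": {"route planning"},
}
runtime = {"camera": 20, "preprocess": 0.3, "lane detection": 70, "control": 0.5,
           "map download": 5, "route planning": 8, "route display": 3}
step3 = prune_short_modules(dag, runtime)              # rule 3
print(remove_with_dependents(step3, {"route planning"}))  # rules 5 and 6
```

In this toy graph, rule 3 removes the short preprocessing and control steps and links lane detection directly to the camera; removing route planning then also removes the map download that only fed it and the route display that only consumed it.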

Based on these six distilling rules, we prune the whole DAG model into a simplified DAG model. First, we keep one representative among similar modules that serve the same purpose. Next, we prune the tasks executed in parallel across the IoT-Edge-Cloud system. Then, we prune short-running modules, such as preprocessing and decision-making. Next, we combine similar tasks executed concurrently, such as the object detection shared by the two classification modules. Finally, we remove the navigation module and its related tasks.

To meet users' differing scale requirements, we design and implement a scalable benchmark based on the scenario benchmark framework. The scalable architecture helps the system allocate resources and workloads.
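The allocation policy of the scalable architecture is not specified here. As a hypothetical illustration, the sketch below scales the workload by the number of simulated vehicles and assigns each vehicle to the currently least-loaded edge server, using a heap from Python's standard `heapq` module. The function `allocate`, its `capacity` parameter, and the server names are assumptions.

```python
import heapq


def allocate(num_vehicles: int, edge_servers: list[str],
             capacity: int) -> dict[str, list[int]]:
    """Assign simulated vehicle workloads (ids 0..num_vehicles-1) to edge
    servers, always picking the currently least-loaded server."""
    if num_vehicles > capacity * len(edge_servers):
        raise ValueError("workload exceeds total edge capacity")
    assignment = {s: [] for s in edge_servers}
    heap = [(0, s) for s in edge_servers]  # (current load, server name)
    heapq.heapify(heap)
    for vehicle in range(num_vehicles):
        load, server = heapq.heappop(heap)  # ties broken by server name
        assignment[server].append(vehicle)
        heapq.heappush(heap, (load + 1, server))
    return assignment


# Hypothetical run: 5 vehicles over two edge servers of capacity 3 each.
print(allocate(5, ["edge-0", "edge-1"], capacity=3))
```

Because greedy least-loaded assignment keeps every server within one unit of the others, the capacity check up front is enough to guarantee that no server is overloaded; a real deployment would weight vehicles by their measured resource demand instead of counting them.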