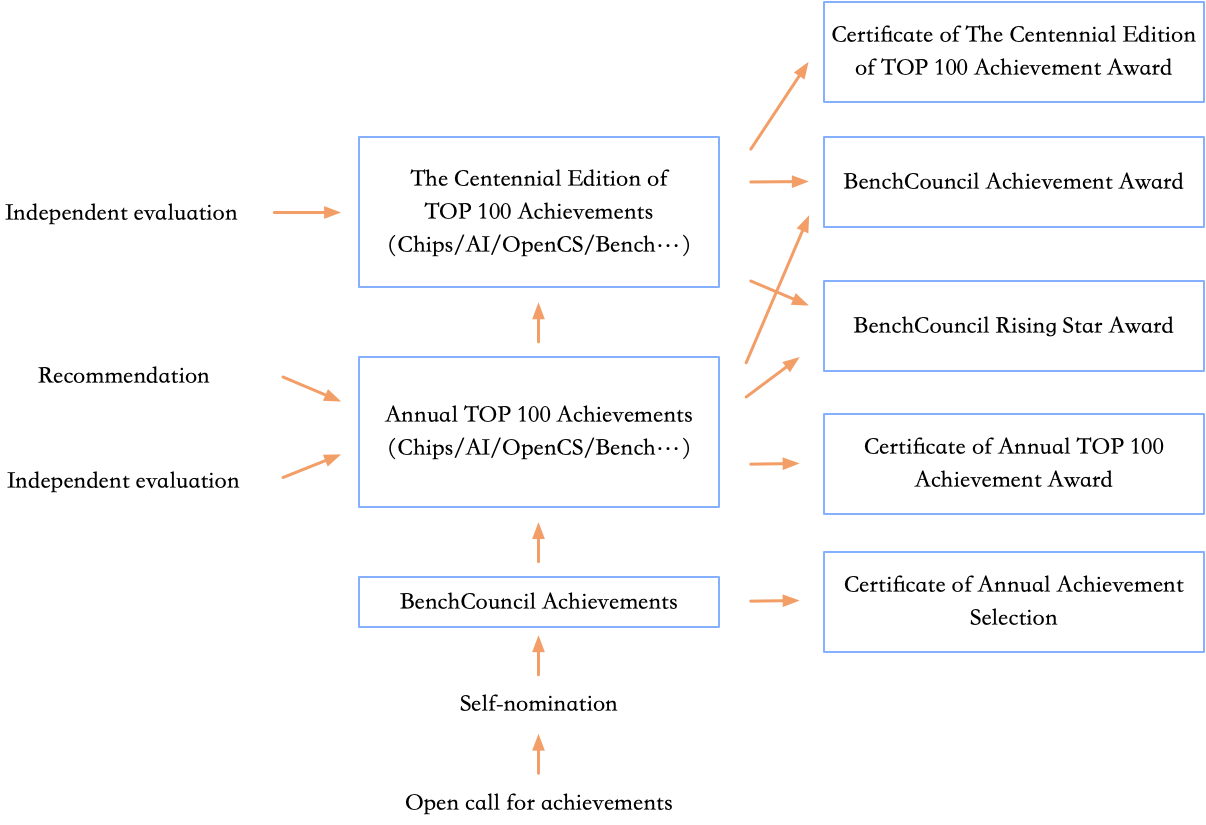

To avoid missing important achievements, BenchCouncil calls for the submission of achievements in the fields of AI, chips, open source, and benchmarks and evaluation. After a preliminary evaluation, the submitters will have the opportunity to present or showcase their work at the FICC 2023 conference and may be included in the final rankings.

Ten benefits

1. The submitted achievements will be included and cited in BenchCouncil's annual achievement report. The submitters need to provide valid citation information, such as paper publications or code repositories. The compiled annual achievement report will be officially published in BenchCouncil Transactions.

2. The submitters of the achievements will receive a certificate of annual achievement selection. For example, the certificate for the chip category will be named "the certificate of 2023 BenchCouncil Chip achievement selection." The certificate will display the individual and institutional names.

3. The achievements will be featured on the official English webpages of the BenchCouncil's achievement evaluation (https://www.benchcouncil.org/evaluation/).

4. The official English webpages of the BenchCouncil will provide links to the achievements. The submitters should ensure that the linked content is authentic, non-deceptive, and accurate.

5. The submitters will have the opportunity to attend all four conferences: Chips 2023, OpenCS 2023, Bench 2023, and IC 2023. They will have the chance to engage in extensive exchanges with international peers.

6. The selected achievements will be showcased at the conference venue. The specific time period (generally not exceeding one day) and location need to be coordinated with the conference organizers.

7. The submitters of the achievements have the opportunity to present their work at the conference, subject to further evaluation and arrangement by the conference organizers.

8. Outstanding achievements invited to present at the conference may have the chance to be included in the annual version of the Top 100 achievements ranking (final version) after evaluation by the committee and recognition from peers. The committee has reserved 20 slots for selecting achievements from the submissions.

9. Exemplary achievements that have stood the test of time have the chance to be included in the annual version of the Top 100 achievements ranking (request for comments).

10. The submitters of the achievements can apply for travel funding.

The summary of BenchCouncil awards

Scope of achievements:

In the field of chips, including but not limited to:

system design, logic design, physical design, timing design, verification and simulation, emulators, auxiliary design tools, emerging chips such as superconducting and quantum chips, emerging accelerators, semiconductor materials, lithography technology, circuits, packaging technology.

In the field of artificial intelligence, including but not limited to:

machine learning, reinforcement learning, natural language processing, image analysis, video analysis, data mining, recommendation systems, knowledge representation and reasoning, medical image processing, multimodal information fusion, collective intelligence, intelligent robots and systems, intelligent control, intelligent healthcare, artificial intelligence and astronomy, artificial intelligence and high-energy physics, artificial intelligence and space science, artificial intelligence and transportation, artificial intelligence and oceanography, artificial intelligence and security, artificial intelligence and law, artificial intelligence and finance, artificial intelligence and traditional industries, ethics and governance of artificial intelligence, artificial intelligence and big data, artificial intelligence and materials, artificial intelligence and civil aviation applications, artificial intelligence and business management.

In the field of open source, including but not limited to:

chips, operating systems, containerization and virtualization, compilers, data management, networks, programming languages and compilers, systems and frameworks, basic libraries, monitoring and optimization tools, big data, AI, cloud computing, HPC, blockchain, LLM, autonomous driving, digital twins, Internet of Things, privacy and security, datasets, education.

In the field of benchmarks and evaluation, including but not limited to:

econometrics, clinical medical evaluation, drug evaluation, business and financial benchmarks, AI evaluation, HPC benchmarks, database benchmarks, memory benchmarks, network benchmarks, hardware and disk benchmarks, graph benchmarks, human resource evaluation, education evaluation, indicator and index systems.

Process of submitting achievements:

1. Please submit your achievements by email to benchcouncil.evaluation@gmail.com. The materials should not exceed one page and should include a description of the achievements and supporting materials (including links to the achievements). Please also provide the names and contact information of the contributors.

2. After the preliminary evaluation, you will be notified by email within one working day. Please keep the materials concise.

3. If you have published papers at Bench 2023 or IC 2023, your achievements will be automatically included once you submit the supporting materials.

4. The submitters of the achievements need to sign the conference participation and safety briefing. At least one person must register for the conference and showcase the achievements (as a poster) during a specific time period. Student submitters can register for the conference as students. There are no additional conference fees, except for upgrade services.

Evaluation criteria:

1. The achievements can be in the form of published or preprint papers, open-source code, products, or services.

2. You must submit an English document describing your achievements.

3. To encourage broader participation, the acceptance rate for the achievements will not be lower than 80%.

Registration

After being selected, you must register for the conference.

Please transfer the registration fee to the following bank account.

- Account Name: HONG KONG AI AND CHIP BENCHMARK RESEARCH LIMITED

- Account Number: 801-670811-838

- Account Address: 6/F Manulife Place, 348 Kwun Tong Road, Kowloon, Hong Kong.

- Bank Name: The Hongkong and Shanghai Banking Corporation Limited

- Bank Address: HSBC Main Building, 1 Queen's Road Central, Central, Victoria City, Hong Kong.

- SWIFT Code: HSBCHKHHHKH

You can also pay by credit card using the following registration link: https://eur.cvent.me/5qQEq

1. One standard registration for FICC 2023.

   - USD $490.00 before November 25th; USD $550.00 after November 25th.

   - Alternatively, one simple registration that does not include paper publication (no food and beverage included): USD $320.00 before November 25th; USD $360.00 after November 25th.

2. Standard-size exhibition space for 1 day.

Furthermore, we offer upgrade services (registration link: https://eur.cvent.me/k85BK) for academic or industrial institutions that have been selected for the Top 100 achievements ranking lists (AI100, Chip100, Open100, Bench100). Institutions that register for an upgrade service enjoy additional benefits at the FICC 2023 conference. The number of registrations, the type and duration of the session speech, and the exhibition space vary depending on whether the institution is from academia or industry. We also provide customized services upon request.

The default upgrade services are as follows:

Academia Upgrade Service:

- Academia Bronze Service:
  - USD $1000.
  - 2 free registrations for FICC 2023.
  - 20-minute regular session.
  - Double standard-size exhibition space for 2 days.

- Academia Silver Service:
  - USD $3000.
  - 4 free registrations for FICC 2023.
  - 20-minute regular session.
  - Double standard-size exhibition space for 4 days.
  - 5-page evaluation report. Please visit https://www.benchcouncil.org/evaluation/ for more information.

- Academia Golden Service:
  - USD $4000.
  - 4 free registrations for FICC 2023.
  - 30-minute primary session.
  - Double standard-size exhibition space for 4 days.
  - 5-page evaluation report. Please visit https://www.benchcouncil.org/evaluation/ for more information.

- Academia Platinum Service:
  - USD $5000.
  - 6 free registrations for FICC 2023.
  - 30-minute plenary session.
  - Double standard-size exhibition space for 4 days.
  - 5-page evaluation report. Please visit https://www.benchcouncil.org/evaluation/ for more information.

Industry Upgrade Service:

- Industry Bronze Service:
  - USD $2000.
  - 2 free registrations for FICC 2023.
  - 20-minute regular session.
  - Double standard-size exhibition space for 2 days.

- Industry Silver Service:
  - USD $4000.
  - 4 free registrations for FICC 2023.
  - 20-minute regular session.
  - Double standard-size exhibition space for 4 days.

- Industry Golden Service:
  - USD $8000.
  - 6 free registrations for FICC 2023.
  - 30-minute primary session.
  - Double standard-size exhibition space for 4 days.

- Industry Platinum Service:
  - USD $10000.
  - 8 free registrations for FICC 2023.
  - 30-minute plenary session.
  - Four standard-size exhibition spaces for 4 days.