| Time | Event | ||

| Saturday, Dec. 2 Registration | |||

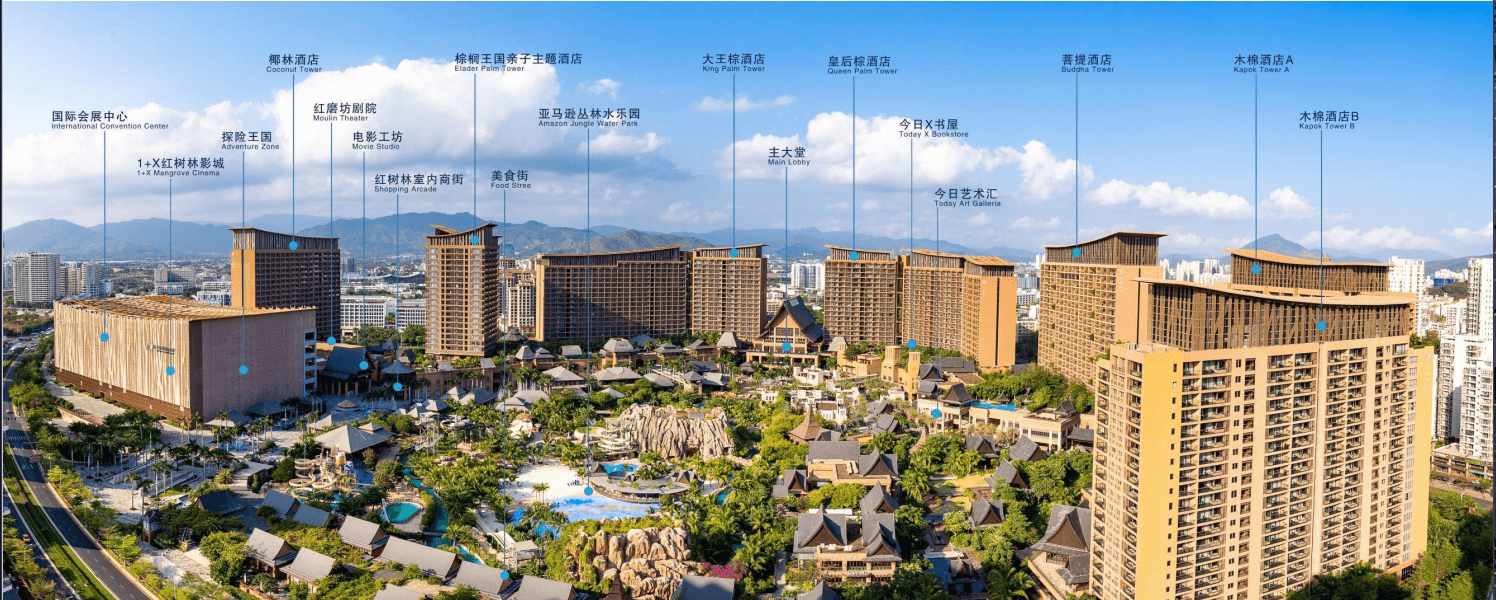

| 14:00-19:00 | Registration Location: Mangrove Tree Resort World Sanya Bay King Palm Tower | ||

| Day 1: Sunday, Dec. 3 Plenary Session | |||

| 8:00-8:10 | Opening Remarks, Vice President of Chinese Academy of Sciences | ||

| 8:10-8:20 | Opening Remarks, Local Government Official | ||

| 8:20-8:25 | Introduction to BenchCouncil Achievement Award (Video) | ||

| 8:25-8:30 | Award Ceremony: 2023 BenchCouncil Achievement Award (Award and Keynote Chair: Prof. Jianfeng Zhan, Prof. D.K. Panda) | ||

| 8:30-9:10 | Keynote 1 - Essentially, All Models Are Wrong, but Some Are Useful; Prof. Lieven Eeckhout, Ghent University | ||

| 9:10-9:15 | Introduction to BenchCouncil Rising Star Award (Video); Award Ceremony: 2023 BenchCouncil Rising Star Award (Award Chair: Prof. Jianfeng Zhan) | ||

| 9:15-9:20 | Opening Remarks of OpenCS 2023, Prof. Guangnan Ni and Prof. D.K. Panda | ||

| 9:20-9:25 | Open100 (1960s-2021, RFC): A Centennial Edition of Top 100 Open Source Achievements (Video) (Award Chair: Dr. Lei Wang) | ||

| 9:25-9:30 | Award Ceremony: Open100 (1960s-2021, RFC) -- Bruce Perens (Award and Keynote Chair: Dr. Lei Wang) | ||

| 9:30-10:10 | Keynote 2 - Open Source: The Past, Present, and Future; Bruce Perens, Co-founder of the Open Source Initiative | ||

| 10:10-10:15 | Opening Remarks of Bench 2023, Prof. Rakesh Agrawal and Prof. Aoying Zhou | ||

| 10:15-10:55 | Keynote 3 - Designing High-Performance and Scalable Middleware and Benchmarks for HPC, AI, and Data Sciences; Prof. D. K. Panda, The Ohio State University (Keynote Chair: Prof. Weining Qiang) | ||

| 10:55-11:00 | Bench100 (1896-2021, RFC): A Centennial Edition of Top 100 Benchmarks & Evaluation Achievements (Video) (Award Chair: Dr. Chunjie Luo) | ||

| 11:00-11:05 | Opening Remarks of IC 2023, Prof. Tao Tang and Prof. Weiping Li | ||

| 11:05-11:10 | AI100 (1943-2021, RFC): A Centennial Edition of Top 100 AI Achievements (Video) (Award Chair: Dr. Wanling Gao) | ||

| 11:10-11:15 | Award Ceremony: Xiangshan - Open100 (1960s-2021, RFC); Contributors: Prof. Yungang Bao, Dr. Dan Tang (Award and Keynote Chair: Prof. Lieven Eeckhout) | ||

| 11:15-11:55 | Keynote 4 - New trend of processor: open source chips; Prof. Yungang Bao, Institute of Computing Technology, Chinese Academy of Sciences | ||

| 11:55-12:00 | Opening Remarks of Chips 2023, Prof. Depei Qian | ||

| 12:00-12:05 | Chip100 (1943-2021, RFC): A Centennial Edition of Top 100 Chips Achievements (Video) (Award Chair: Dr. Guoxin Kang) | ||

| 12:05-12:10 | Award Ceremony: Chip100 (1940s-2021, RFC) -- Steve Furber (Award and Keynote Chair: Dr. Guoxin Kang) | ||

| 12:10-12:20 | Keynote 5 - Neuromorphic Computing; Prof. Steve Furber, University of Manchester | ||

| Day 1: Sunday, Dec. 3 Plenary Session | |||

| 14:00-14:40 | Keynote 6 - Creating Intelligent Cyberinfrastructure for Democratizing AI: Overview of the Activities at the NSF-AI Institute ICICLE; Prof. D. K. Panda, The Ohio State University (Keynote Chair: Prof. Yanwu Yang) | ||

| 14:40-14:45 | Award Ceremony: LSTM - AI100 (1943-2021, RFC); Contributors: Prof. Jürgen Schmidhuber (Award and Keynote Chair: Prof. Weiping Li) | ||

| 14:45-15:15 | Keynote 7 - Correcting the first draft of the 'Top 100 AI Achievements' by IOBC; Prof. Jürgen Schmidhuber, Father of LSTM, Fellow of the European Academy of Sciences, Co-founder of NNAISENSE | ||

| 15:15-15:20 | Chip (2022-2023): An Annual Edition of Top 100 chips achievements | ||

| 15:20-15:25 | Award Ceremony: OpenBLAS - Open100 (1960s-2021, RFC); Contributors: Prof. Yunquan Zhang, Dr. Xianyi Zhang (Award and Keynote Chair: Bruce Perens) | ||

| 15:25-15:55 | Keynote 8 - OpenBLAS: an optimized BLAS library based on GotoBLAS; Dr. Xianyi Zhang, Founder of PerfXLab | ||

| 15:55-16:00 | Open (2022-2023): An Annual Edition of Top 100 open source achievements (Video) | ||

| 16:00-16:05 | Award Ceremony: Hetu - Annual Achievement Selection; Contributors: Prof. Bin Cui, et al. (Award and Keynote Chair: Dr. Biwei Xie) | ||

| 16:05-16:35 | Keynote 9 - Hetu: Efficient and Scalable Distributed Deep Learning Systems; Dr. Fangcheng Fu, Peking University | ||

| 16:35-16:40 | AI (2022-2023): An Annual Edition of Top 100 AI achievements (Video) | ||

| 16:40-16:45 | Award Ceremony: Computational Storage Device - Annual Achievement Selection; Contributors: Prof. Yaodong Cheng, et al. (Award and Keynote Chair: Prof. Ke Zhang) | ||

| 16:45-17:15 | Keynote 10 - Computational Storage Device for Scientific Big Data; Prof. Yaodong Cheng, Institute of High Energy Physics, Chinese Academy of Sciences | ||

| 17:15-17:20 | Bench (2022-2023): An Annual Edition of Top 100 benchmarks & evaluation achievements (Video) | ||

| 17:20-17:25 | Award Ceremony: TDengine - Open100 (1960s-2021, RFC); Contributors: Jianhui Tao, et al. (Award and Keynote Chair: Dr. Sa Wang) | ||

| 17:25-17:55 | Keynote 11 - Open Source and Cloud Services: A Dual Engine for Innovating Industrial Software; Jianhui Tao, Founder of TAOS Data | ||

| 17:55-18:00 | Award Ceremony: FastStream - Open100 (2022-2023); Contributors: Hajdi Cenan, Davor Runje (Award and Keynote Chair: Prof. Zhifei Zhang) | ||

| 18:00-18:30 | Keynote 12 - FastStream: a powerful and easy-to-use Python framework for building asynchronous services interacting with event streams; Hajdi Cenan, Davor Runje (Airt.ai) | ||

| 18:50-21:00 | Banquet | ||

| Day 2, Monday, Dec. 4 | |||

| Chips Invited Talk Session 1: New technologies and New methods I | |||

| 9:00-9:20 | Lieven Eeckhout | Ghent University | Decoupled Vector Runahead |

| 9:20-9:40 | Lieven Eeckhout | Ghent University | SAC: Sharing-Aware Caching in Multi-Chip GPUs |

| 9:40-10:00 | Qi Guo | ICT, CAS | Automatically Constrained High-Performance Library Generation |

| 10:00-10:20 | Ceyu Xu | Duke | Fast Logic Synthesis Prediction with Deep Learning |

| 10:20-10:40 | Tea Break | ||

| OpenCS Invited Talk Session 1: High-performance HW/SW technology | |||

| 10:40-11:00 | Kaifan Wang, Yinan Xu | Beijing Institute of Open Source Chip, ICT CAS | Xiangshan: Open-source high-performance RISC-V processor |

| 11:00-11:20 | Hongbin Zhang | Institute of Software, Chinese Academy of Sciences | BUDDY MLIR: An MLIR-based compiler framework designed for a co-design ecosystem from DSL to DSA |

| 11:20-11:40 | Zhiyi Wang | ICT, CAS | STEED: A High Performance Analytical Database for JSON |

| IC 2023 Invited Talk | |||

| 8:30-9:00 | Zhu Tingshao (Institute of Psychology, Chinese Academy of Sciences) | Artificial Intelligence-Based Identification of Psychological and Psychiatric Illnesses | |

| IC 2023 Session 1 – AI for Finance, Civil Aviation | |||

| 9:00-9:20 | Sixian Chen (China University of Petroleum), Zonghu Liao (China University of Petroleum), Jingbo Zhang (China University of Petroleum) | Forecasting the price of Bitcoin using an explainable CNN-LSTM model | |

| 9:20-9:40 | Xinlin Wang (University of Luxembourg), Mats Brorsson (University of Luxembourg) | Augmenting Bankruptcy Prediction using Reported Behavior of Corporate Restructuring | |

| 9:40-10:00 | Li Lu (Civil Aviation Flight University of China), Juncheng Zhou (Civil Aviation Flight University of China), Chen Li (Civil Aviation Flight University of China), Yuqian Huang (Civil Aviation Flight University of China), Jiayi Nie (Civil Aviation Flight University of China), Junjie Yao (Civil Aviation Flight University of China) | 3D Approach Trajectory Optimization Based on Combined Intelligence Algorithms | |

| 10:00-10:20 | Jiahui Shen (Civil Aviation Flight University of China) | A-SMGCS: Innovation, Applications, and Future Prospects of Modern Aviation Ground Movement Management System | |

| 10:20-10:40 | Lin Zou (CAFUC), Jingtao Wang (CAFUC), Weiping Li (CAFUC), Jianxiong Chen (CAFUC) | Interpretable prediction of commercial flight delay duration based on machine learning methods | |

| IC 2023 Session 2 – AI for Medicine, Education | |||

| 10:40-11:00 | Hongyu Kang (Institute of Medical Information, Chinese Academy of Medical Sciences and Peking Union Medical College), Qin Li (Department of Biomedical Engineering, School of Life Science, Beijing Institute of Technology), Jiao Li (Institute of Medical Information, Chinese Academy of Medical Sciences and Peking Union Medical College), Li Hou (Institute of Medical Information, Chinese Academy of Medical Sciences and Peking Union Medical College) | KGCN-DDA: a knowledge graph based GCN method for drug-disease association prediction | |

| 11:00-11:20 | Geng Gao (School of Biomedical Engineering), Yunfei He (School of Biomedical Engineering), Li Meng (School of Biomedical Engineering), Jinlong Shen (School of Biomedical Engineering), Lishan Huang (School of Biomedical Engineering), Fengli Xiao (Department of Dermatology of First Affiliated Hospital, and Institute of Dermatology), Fei Yang (School of Biomedical Engineering) | Label-independent Information Compression for Skin Diseases Recognition | |

| 11:20-11:40 | Luning Sun (University of Cambridge), Hongyi Gu (Netmind.ai), Rebecca Myers (University of Cambridge), Zheng Yuan (King's College London) | A new dataset and method for creativity assessment using the alternate uses task | |

| Bench 2023 Session 1: Paper Session | |||

| 9:00-9:20 | Zhenying Li | ICT, CAS | ICBench: Benchmarking Knowledge Mastery in Introductory Computer Science Education |

| 9:20-9:40 | Xiuyu Jiang | Sun Yat-sen University | MolBench: A Benchmark of AI Models for Molecular Property Prediction |

| 9:40-10:00 | Along Mao | Computer Network Information Center (CNIC), Chinese Academy of Sciences | MMDBench: A Benchmark for Hybrid Query in Multimodal Database |

| 10:00-10:20 | Fei Tang | UCAS | AGIBench: A Multi-granularity, Multimodal, Human-referenced, Auto-scoring Benchmark for Large Language Models |

| 10:20-10:40 | Rohit P. Singh | University of Cincinnati | Generating High Dimensional Test Data for Topological Data Analysis |

| 10:40-11:00 | Yatao Li | Microsoft Research | Does AI for science need another ImageNet Or totally different benchmarks? A case study of machine learning force fields |

| 11:00-11:20 | Fan Zhang | ICT, CAS | Cross-layer profiling of IoTBench |

| 11:20-11:40 | Dhabaleswar K. Panda | The Ohio State University | Benchmarking Modern Databases for Storing and Profiling Very Large Scale HPC Communication Data |

| 11:40-12:00 | Yikang Yang | UCAS | A Linear Combination-based Method to Construct Proxy Benchmarks for Big Data Workloads |

| 9:00-12:00 | Workshop: Talents Education and Development for Open-source Computer Systems and Chips | ||

| Day 2, Monday, Dec. 4 | |||

| OpenCS Invited Talk Session 2: AI and LLM | |||

| 14:00-14:20 | Yang Liu | Alibaba | FaceChain: a deep-learning toolchain for generating your Digital-Twin |

| 14:20-14:40 | Li Jiang, Wei Zheng | Microsoft | AutoGen: Enabling Next-Gen AI Applications via Multi-Agent Conversation |

| 14:40-15:00 | Ya Luo | Peking University | CraneSched: An Intelligent Scheduling System |

| 15:00-15:20 | Hongliang Jiang | Meituan | YOLOv6: a single-stage object detection framework dedicated to industrial applications |

| 15:20-15:40 | Tea Break | ||

| OpenCS Invited Talk Session 3: Frameworks and tools for emerging applications | |||

| 15:40-16:00 | Wenli Zhang | ICT, CAS | Open-source user-space network stack and 3 test tools for C10M high concurrency |

| 16:00-16:20 | Yingjie Jiang, Ran Mo | Central China Normal University | A Comprehensive Study on Code Clones in Automated Driving Software |

| 16:20-16:40 | Yuanjia Zhang | PingCAP | TiDB: an open-source, cloud-native, distributed, MySQL-Compatible database for elastic scale and real-time analytics |

| 16:40-17:00 | Yue Jin | ICT, CAS | DASICS: Enhancing Memory Protection with Dynamic Intra-Address Space Bounds |

| 17:00-17:20 | Xiaoya Xia | X-Lab | OpenDigger: An open source analysis report project |

| 17:20-17:40 | Jianwei Zhu | Huawei | openEuler: Bringing new opportunities to the diversified computing era |

| 17:40-18:00 | | Tsinghua University | IoTDB: Internet of Things Database for Time-series Data |

| Chips Invited Talk Session 1: New technologies and New methods II | |||

| 14:00-14:20 | Qinggang Wang | Huazhong University of Science and Technology | Memory Access Optimization Mechanism of FPGA-based Graph Computing Accelerator |

| 14:20-14:40 | Yishan Wang | SIAT, CAS | Closed-loop Brain-Computer Interface Chip |

| 14:40-15:00 | XiaoFeng Hou | Shanghai Jiao Tong University | AMG Multi-modal computing unit |

| 15:00-15:20 | XingJun Wang | Peking University | Microcomb-driven silicon photonic systems |

| 15:20-15:40 | Tea Break | ||

| Chips Invited Talk Session 2: New architectures | |||

| 15:40-16:00 | GuangMing Tang, Haihang You | ICT, CAS | SUSHI Superconductor Chip |

| 16:00-16:20 | Ke Zhang | ICT, CAS | SERVE: Agile Cloud Hardware/Software Development Platform for Computer Systems Course Experiments |

| 16:20-16:40 | Xueqi Li | ICT, CAS | Specialized Accelerators for High-Performance Domains and Cross-Layer Performance Optimization |

| 16:40-17:00 | Junnan Li | National University of Defense Technology | Intelligent Network Processor for Deterministic and In-Network Computing |

| IC 2023 Session 3 – AI for Law | |||

| 14:00-14:20 | Weijie Huang (Shenzhen University), Xi Chen (corresponding author) (Shenzhen University) | A Levy Scheme for User-Generated-Content Platforms and its Implication for Generative AI Providers | |

| 14:20-14:40 | Jiaxing Li (Nankai University) | Moving Beyond Text: Leveraging Multi-modal Data to Enhance AI's Understanding of Legal Reasoning through Rebuttals | |

| 14:40-15:00 | Laitan Ren (The Law School of Hainan University), Jingjing Wu (The Law School of Hainan University) | The Worldwide Contradiction of the AIGC Regulatory Theory Paradigm and China’s Response: Focus on the Theories of Normative Models and Regulatory Systems | |

| 15:00-15:20 | Qun Wang (Shanghai Maritime University), Shuhao Qian (Shenzhen Research Institute of Big Data), Jiahuan Yan (East China University of Political Science and Law) | Learning Deep Features for Trademark Law Prediction Based on TF-IDF and XGBoost | |

| 15:20-15:40 | Yicheng Liao (Zhejiang University) | Review of Big Data Evidence in Criminal Proceedings: Basis of Academic theory, Practical Pattern and Mode Selection | |

| IC 2023 Session 4 – AI for Ocean, Space | |||

| 15:40-16:00 | Kai Wang (Ocean University of China), Guoqiang Zhong (Ocean University of China) | Diffusion Probabilistic Models for Underwater Image Super-Resolution | |

| 16:00-16:20 | Libin Du (Shandong University of Science and Technology), Mingyang Liu (Shandong University of Science and Technology), Zhichao Lv (Shandong University of Science and Technology), Zhengkai Wang (Shandong University of Science and Technology), Lei Wang (Shandong University of Science and Technology), Gang Wang (Shandong University of Science and Technology) | Classification Method for Ship-Radiated Noise Based on Joint Feature Extraction | |

| 16:20-16:40 | Yun-Long Li (National Space Science Center, Chinese Academy of Sciences), Ci-Feng Wang (National Space Science Center, Chinese Academy of Sciences), Jia Zhong (National Space Science Center, Chinese Academy of Sciences), Yang Lu (National Space Science Center, Chinese Academy of Sciences) | Semantic retrieval of Mars data using contrastive learning and convolutional neural network | |

| 16:40-17:00 | Sun Xian, Fu Kun, Diao Wenhui, Yan Zhiyuan, Feng Yingchao, Wang Peijin, Zhang Wenkai, Li Jihao | RingMo: A Remote Sensing Foundation Model with Masked Image Modeling | |

| 17:00-17:20 | Shaofeng Fang (National Space Science Center, Chinese Academy of Sciences), Jie Ren (School of Earth and Space Sciences, Peking University) | Machine Learning Techniques for Automatic Detection of ULF waves | |

| IC 2023 Session 5 – AI for Edge computing | |||

| 17:20-17:40 | Guo Xiaoqi, Chen Weineng, Wei Fengfeng, Mao Wentao, Hu Xiaomin, Zhang Jun | Edge–Cloud Co-Evolutionary Algorithms for Distributed Data-Driven Optimization Problems | |

| 17:40-18:00 | Li Feng | Edge computing operating system: Seaway Edge | |

| Bench 2023 Session 2: Paper Session | |||

| 14:00-14:20 | Zechun Zhou | University of Science and Technology of China | Automated HPC Workload Generation Combining Statistic Modeling and Autoregressive Analysis |

| 14:20-14:40 | Wanling Gao | ICT, CAS | Edge AIBench 2.0: A scalable autonomous vehicle benchmark for IoT–Edge–Cloud systems |

| 14:40-15:00 | Jiahui Xu | ICT, CAS | StreamAD: A cloud platform metrics-oriented benchmark for unsupervised online anomaly detection |

| Bench 2023 Invited Talk Session 1: Benchmark and Dataset | |||

| 14:40-15:00 | Zhiyuan Yan | ICT, AIR | FAIR1M datasets |

| 15:00-15:20 | Weineng Chen | South China University of Technology | Specification for Reliability evaluation of swarm intelligent optimization algorithms |

| 15:20-15:40 | Tea Break | ||

| 15:40-16:00 | Xiaofei Yan | The Institute of High Energy Physics of the Chinese Academy of Sciences | Juno workloads |

| 16:00-16:20 | Xiangnan Zhao | National Institute of Metrology of China | Storage capacity & performance benchmark |

| 16:20-16:40 | Huihui Fang | Sun Yat-sen University, South China University of Technology | iChallenge datasets |

| 16:40-17:00 | Tongyu Liu | East China Normal University | Hperf: Efficient Cross-platform Multiplexing of Hardware Performance Counters via Adaptive Grouping |

| 17:00-17:20 | Yuwei Gao | NBSDC | Governance and Sharing of high-quality scientific data in basic fields in China |

| Day 3, Tuesday, Dec. 5 | |||

| OpenCS Invited Talk Session 4: High Performance Computing and Analysis | |||

| 9:00-9:20 | Weile Jia | ICT, CAS | DeePMD: A deep learning package for many-body potential energy representation and molecular dynamics |

| 9:20-9:40 | Ting Cao | Microsoft | ArchProbe: a profiling tool to demythify and quantify mobile GPU architectures |

| 9:40-10:00 | Zhuolun Jiang | ICT, CAS | Asynchronous Memory Access Unit: Exploiting Massive Parallelism for Far Memory Access |

| 10:00-10:20 | Xiaoyi Lu | The Ohio State University | PMIdioBench: Understanding the Idiosyncrasies of Real Persistent Memory |

| 10:20-10:40 | Tea Break | ||

| Chips Invited Talk Session 3: Accelerator | |||

| 10:40-11:00 | Zhigao Zheng | Wuhan University | Path Merging Based Betweenness Centrality Algorithm on GPU |

| 11:00-11:20 | Mingzhe Zhang | IIE, CAS | Fully Homomorphic Acceleration Based on GPGPU |

| 11:20-11:40 | Liwei Guo | University of Electronic Science and Technology of China | A 50KB Machine Learning GPU Stack |

| 11:40-12:00 | Junsheng Lai | KINGTIGER TESTING | Complete Memory Aging Technology |

| IC 2023 Session 6 - AI for High Energy Physics, Materials | |||

| 9:00-9:20 | Jiameng Zhao (Zhengzhou University), Zhengde Zhang (Chinese Academy of Sciences), Fazhi Qi (Chinese Academy of Sciences) | An Intelligent Image Segmentation Annotation Method Based on SAM Large Model | |

| 9:20-9:40 | Yutao Zhang (Zhengzhou University), Yaodong Cheng (Institute of High Energy Physics, Chinese Academy of Sciences) | ParticleNet for Jet Tagging in Particle Physics on FPGA | |

| 9:40-10:00 | Cen Mo (Shanghai Jiao Tong University), Fuyudi Zhang (Shanghai Jiao Tong University), Liang Li (Shanghai Jiao Tong University) | Neutrino Reconstruction in TRIDENT Based on Graph Neural Network | |

| 10:00-10:20 | Jiayi Xu (Institute of High Energy Physics, Chinese Academy of Sciences), Peng Kuang (Institute of High Energy Physics, Chinese Academy of Sciences), Fuyan Liu (Institute of High Energy Physics, Chinese Academy of Sciences), Xingzhong Cao (Institute of High Energy Physics, Chinese Academy of Sciences), Baoyi Wang (Institute of High Energy Physics, Chinese Academy of Sciences), Haiying Wang (China Univ Geosci Beijing, Sch Sci), Peng Zhang (Institute of High Energy Physics, Chinese Academy of Sciences) | Application of Machine Learning-Based Neural Networks in Positron Annihilation Spectroscopy Data Analysis | |

| 10:20-10:40 | Hideki Okawa (Institute of High Energy Physics, Chinese Academy of Sciences), Michael James Fenton (University of California, Irvine), Alexander Shmakov (University of California, Irvine), Yuji Li (Fudan University), Ko-Yang Hsiao (National Tsing Hua University), Shih-Chieh Hsu (University of Washington, Dept. of Physics), Daniel Whiteson (University of California, Irvine), Pierre Baldi (University of California, Irvine) | Symmetry Preserving Attention Networks for resolved top quark and Higgs boson reconstruction at the LHC | |

| 10:40-11:00 | Hideki Okawa (Institute of High Energy Physics, Chinese Academy of Sciences) | Quantum Tracking for Future Colliders | |

| 11:00-11:20 | Abdualazem Fadol Mohammed (Institute of High Energy Physics) | The prospect of quantum machine learning algorithms in High Energy Physics | |

| 11:20-11:40 | Zejia Lu (Shanghai Jiao Tong University), Xiang Chen (Shanghai Jiao Tong University), Jiahui Wu (Shanghai Jiao Tong University), Yulei Zhang (Shanghai Jiao Tong University), Liang Li (Shanghai Jiao Tong University) | Application of Graph Neural Networks in Dark Photon Search with Visible Decays at Future Beam Dump Experiment | |

| IC 2023 Session 7 – AI for Algorithm, Security, and System | |||

| 9:00-9:20 | Jinyuan Luo (Guangzhou University), Linhai Xie (National Center for Protein Sciences (Beijing)), Hong Yang (Guangzhou University), Xiaoxia Yin (Guangzhou University), Yanchun Zhang (Guangzhou University) | Machine Learning for time-to-event prediction and survival clustering: A review from statistics to deep neural networks | |

| 9:20-9:40 | Shuran Lin (Beijing Jiaotong University), Chunjie Zhang (Beijing Jiaotong University), Yanwu Yang (Huazhong University of Science and Technology) | Second-Order Gradient Loss Guided Single-Image Super-Resolution | |

| 9:40-10:00 | Severin Reiz (Technical University of Munich), Keerthi Gaddameedi (Technical University of Munich), Tobias Neckel (Technical University of Munich), Hans-Joachim Bungartz (Technical University of Munich) | Efficient and Scalable Kernel Matrix Approximations using Hierarchical Decomposition | |

| 10:00-10:20 | Guilan Li | The implementation and optimization of FFT based on the MT-3000 chip SAR imaging | |

| 10:20-10:40 | Haojia Huang (School of Data and Computer Science, Sun Yat-sen University), Pengfei Chen (School of Data and Computer Science, Sun Yat-sen University), Guangba Yu (School of Data and Computer Science, Sun Yat-sen University) | EDFI: Endogenous Database Fault Injection with a Fine-Grained and Controllable Method | |

| 10:40-11:00 | Zhou Shijie, Liu Qihe, Wu Chunjiang, Wu Zhewei, Zeng Yi, Qiu Shilin, Zhang Zhun, Zhou Ling, Liu Haoyu, Wang Junhan, Yu Ruilong, Gou Min, Liang Tao, Pan Haolan | Artificial Intelligence Security Detection Platform | |

| 11:00-11:20 | Siqi Shi (School of Materials Science and Engineering, Shanghai University), Hailong Lin (School of Materials Science and Engineering, Shanghai University), Zhengwei Yang (School of Computer Engineering and Science, Shanghai University), Linhan Wu (School of Computer Engineering and Science, Shanghai University), Yue Liu (School of Computer Engineering and Science, Shanghai University) | Predicting activation energy of Li-containing compounds with graph neural network | |

| 11:20-11:40 | Zongxiao Jin (Shanghai University of Engineering Science), Yu Su (Shanghai University of Engineering Science), Jun Li (Shanghai University of Engineering Science), Huiwen Yang (Shanghai University of Engineering Science), Jiale Li (Shanghai University of Engineering Science), Huaqing Fu (Shanghai Eraum Alloy Materials Co., Ltd.), Zhouxiang Si (Shanghai Eraum Alloy Materials Co., Ltd.), Xiaopei Liu (Shanghai Eraum Alloy Materials Co., Ltd.) | Convolutional Graph Neural Networks for Predicting Enthalpy of Formation in Intermetallic Compounds Using Continuous Filter Convolutional Layers | |

| Bench 2023 Invited Talk Session 2: Benchmark and Dataset | |||

| 9:00-9:20 | Niklas Muennighoff | Hugging Face | MTEB: Massive Text Embedding Benchmark |

| 9:20-9:40 | Zirui Hu, Siyang Weng | East China Normal University | Lauca: Generating Application-Oriented Synthetic Workloads |

| 9:40-10:00 | Yucong Duan | Hainan University | Evaluating Large Language Models |

| 10:00-10:20 | Yue Zhou | Shanghai Jiao Tong University | MMRotate: A Rotated Object Detection Benchmark using PyTorch |

| 10:20-10:40 | Baojin Huang | Wuhan University | Masked face recognition dataset and application |

| 10:40-11:00 | Chenxi Wang | UCAS | DPUBench: An application-driven scalable benchmark suite for comprehensive DPU evaluation |

| 11:00-11:20 | Jindong Wang, Kaijie Zhu | Microsoft | PromptBench: Towards Evaluating the Robustness of Large Language Models on Adversarial Prompts |

| 11:20-11:40 | Fenglin Bi | East China Normal University | OpenPerf: Infrastructure and application test and analysis framework |

| 11:40-12:00 | Guoxin Kang | ICT, CAS | OLxPBench: Real-time, Semantically Consistent, and Domain-specific are Essential in Benchmarking, Designing, and Implementing HTAP Systems |

| 12:00-12:20 | Qi Zhang | Tsinghua University | AIPerf: Automated Machine Learning as an AI-HPC Benchmark |

| Day 3, Tuesday, Dec. 5 | |||

| Chips Invited Talk Session 4: Design Method | |||

| 14:00-14:20 | David Schall | The University of Edinburgh | Warming Up a Cold Front-End with Ignite |

| 14:20-14:40 | Jin Zhao | Huazhong University of Science and Technology | TDGraph: Topology-driven Streaming Graph Processing Accelerator |

| 14:40-15:00 | Ke Zhang | ICT, CAS | REMU: Enabling Cost-Effective Checkpointing and Deterministic Replay in FPGA-based Emulation |

| 15:00-15:20 | Zhongcheng Zhang | ICT, CAS | SIMD co-processor |

| 15:20-15:40 | Tea Break | ||

| OpenCS Invited Talk Session 5: Big Data and Platform | |||

| 15:40-16:00 | Yongming Wei | FMSoft | HVML: A new-style and easy-to-learn programming language |

| 16:00-16:20 | Lei Zou | Peking University | gStore: a graph based RDF triple store |

| 16:20-16:40 | Xiaojie Zhu | Computer Network Information Center, Chinese Academy of Sciences | PiFlow: an easy to use, powerful big data pipeline system |

| 16:40-17:00 | Huaqian Cai | Peking University | BDOA and BDWare: Internet of Data Based on Digital Object Architecture and Big Data Interoperability Technology |

| 17:00-17:20 | | TAL Education Group | pyKT: A Python Library to Benchmark Deep Learning based Knowledge Tracing Models |

| 17:20-17:40 | Yenan Tang | X-Lab | Hypercrx: A browser extension for insights into GitHub projects and developers |

| 17:40-18:00 | Fenglin Bi | East China Normal University | OpenLeaderboard: A Window to Open Source Dynamics |

| IC 2023 Session 8 – AI applications 1 | |||

| 14:00-14:20 | Fanda Fan | Hierarchical Masked 3D Diffusion Model for Video Outpainting | |

| 14:20-14:40 | Wang Haifeng, Wu Tong, Yang Kun | Express delivery order generation model based on deep learning | |

| 14:40-15:00 | Hao Qinfen, Liu Jing | Knowledge distillation | |

| 15:00-15:20 | Chen Zugang, Feng Hang, Cai Kuangsheng | Spatiotemporal big data computing | |

| 15:20-15:40 | Chen Zugang | Research on the construction method of earth observation knowledge hub based on knowledge graph | |

| IC 2023 Session 9 – AI applications 2 | |||

| 15:40-16:00 | Fan Yiqun, Liu Fang, Gao Ying, Sun Shengting, Shao Changyu, Zhang Li | Intelligent operation and maintenance management and control platform for urban municipal facilities | |

| 16:00-16:20 | Zhuang Lilin | Cartoonist | |

| 16:20-16:40 | Mohd Javaid | ChatGPT for healthcare services: An emerging stage for an innovative perspective | |

| 16:40-17:00 | Abid Haleem | An era of ChatGPT as a significant futuristic support tool: A study on features, abilities, and challenges | |

| 17:00-17:20 | Partha Pratim Ray | Benchmarking, ethical alignment, and evaluation framework for conversational AI: Advancing responsible development of ChatGPT | |

| Bench 2023 Session 3: Paper Session | |||

| 14:00-14:20 | Lichen Jia | ICT, CAS | ERMDS: A obfuscation dataset for evaluating robustness of learning-based malware detection system |

| 14:20-14:40 | Wenjing Liu; Xiaoshuang Liang | Guangxi Normal University | Algorithmic fairness in social context |

| 14:40-15:00 | Hainan Ye | UCAS | MetaverseBench: Instantiating and benchmarking metaverse challenges |

| 15:00-15:20 | Chunjie Luo | ICT, CAS | HPC AI500 V3.0: A scalable HPC AI benchmarking framework |

| 15:20-15:40 | Tea Break | ||

| 15:40-16:00 | Fei Tang | UCAS | SNNBench: End-to-end AI-oriented spiking neural network benchmarking |

| 16:00-16:20 | Simin Chen | Zhongguancun National Laboratory | IoTBench: A data centrical and configurable IoT benchmark suite |

| 16:20-16:40 | Yuyang Li; Ning Li | East China Normal University | Hmem: A Holistic Memory Performance Metric for Cloud Computing |

| 16:40-17:00 | Zhengxin Yang | ICT, CAS | Quality at the Tail of Machine Learning Inference |

| 17:00-17:20 | Sang Wook Stephen Do | Futurewei Technologies | Enabling Reduced Simpoint Size Through LiveCache and Detail Warmup |

| 14:00-17:00 | Workshop: Information Superbahn | ||

| Day 4, Wednesday, Dec. 6 | |||

| Technical Achievements Evaluation Roundtable Forum | |||

| 9:00-10:00 | Achievement Technology Evaluation Discussion | ||

| 10:00-11:00 | Issue Certificate | ||

| Registration type | | Early bird ticket (By November 25th) | Full fare ticket (After November 25th) |

|---|---|---|---|

| General registration attendees are entitled to an additional meal ticket benefit for children under 12 years old. | |||

| International Registration (Accepted papers must use the International registration) | Standard registration including paper publication | US $690.00 | US $760.00 |

| | Simple registration including paper publication (No food and beverage included) | US $530.00 | US $580.00 |

| | Standard registration not including paper publication | US $490.00 | US $550.00 |

| | Simple registration not including paper publication (No food and beverage included) | US $320.00 | US $360.00 |

| | Student registration including paper publication | US $420.00 | US $480.00 |

| | Simple student registration including paper publication (No food and beverage included) | US $260.00 | US $280.00 |

| | Standard student registration not including paper publication | US $280.00 | US $320.00 |

| | Simple student registration not including paper publication (No food and beverage included) | US $200.00 | US $230.00 |

| Group Registration | Registration method: Please transfer the fees to the following account and send the attendees' information (first name, last name, email address, institution) to benchcouncil@bafst.com. Once the registration fees are received, the registration team will check the information and help complete the group registration. Account Name: HONG KONG AI AND CHIP BENCHMARK RESEARCH LIMITED; Account Number: 801-670811-838; Account Address: 6/F Manulife Place, 348 Kwun Tong Road, Kowloon, Hong Kong; Bank Name: The Hongkong and Shanghai Banking Corporation Limited; Bank Address: HSBC Main Building, 1 Queen's Road Central, Central, Victoria City, Hong Kong; SWIFT Code: HSBCHKHHHKH | 5 to 10 attendees, 5% discount | |

| | | 11 to 15 attendees, 10% discount | |

| | | 16 to 20 attendees, 15% discount | |

| Registration Website: | https://eur.cvent.me/5qQEq | ||

| About the refund policy: 1. If canceled by 10/31/2023, the amount refunded is 100%. 2. If canceled by 11/15/2023, the amount refunded is 80%. 3. No refunds after 11/15/2023. 4. No refund will be provided if the registrant is the only author of an accepted paper who has registered for the conference. Note that multiple papers cannot share the same registration ID. | |||

Sanya Bay Mangrove Tree Resort World is situated in the future administrative center of Sanya Bay, renowned for its Coconut Dream Corridor. Embracing the innovative planning concept of "around the river with multi-business," this resort complex in China seamlessly integrates the finest accommodations, exhibitions, business facilities, leisure activities, entertainment options, and shopping experiences, all boasting cutting-edge design. Regardless of whether guests are families, corporate travelers, conference attendees, parents with children, or honeymooners, they will discover their own personalized "world" within this exceptional destination.

Address: No. 155 Fenghuang Road, Tianya District, Sanya, Hainan

Coconut Tower Traditional (45 square meters, single-bed, single-breakfast): CNY ¥400.00/Day

Coconut Tower Traditional (45 square meters, double-bed, double-breakfast): CNY ¥480.00/Day

King Palm Tower Traditional (53 square meters, single-bed, single-breakfast): CNY ¥480.00/Day

King Palm Tower Traditional (53 square meters, double-bed, double-breakfast): CNY ¥530.00/Day

King Palm Tower Suites (double breakfast included): CNY ¥950.00/Day

If you need assistance with hotel reservations or if you encounter any issues with your reservation, please contact liuyun@mail.bafst.com

Sanya Phoenix International Airport to the venue: about 10 kilometers, with a fare of about CNY ¥40.00.